Table of Contents

- Introduction

- Factors to consider before embracing K8s

- When Kubernetes might not be a good fit

- To Kubernetes or not Kubernetes, that is not the question

- Xplenty’s move to Kubernetes

- DreamFactory’s Kubernetes Adoption

- Major Drivers of Adopting K8s

- Significant indicators that using Kubernetes will be a considerable overhauling process

- Conclusion

Introduction

Kubernetes is now the industry standard for cloud-based organizations. Slowly, many enterprises and mid-level companies are adopting it as the default platform for managing their applications.

But we all know Kubernetes adoption has its challenges, as well as its associated costs.

How do we decide when and what to migrate to Kubernetes? Does migrating to Kubernetes mean overhauling all DevOps processes?

Adopting K8s should not lead to an overhaul of your DevOps process - it should complement it.

Kubernetes offers many benefits, such as a self-healing infrastructure, improved go-to-market time, faster release cycles, and fewer outages. Some companies need these benefits, and for them the price of a migration is worth paying. In other cases, the overhaul would do little for the business's performance.

This post will examine two examples of companies that successfully migrated specific parts of their infrastructure to Kubernetes. By doing so, they achieved functionality that directly benefited their business. In both cases, they did not entirely overhaul their DevOps processes. To easily get started with monitoring Kubernetes clusters, check out our tutorial on using the Telegraf agent as a Daemonset to forward node/pod metrics to a data source and use that data to create custom dashboards and alerts.

These stories show what a “Kubernetes migration” looks like in practice and why teams undertake one.

First, let’s examine the critical factors in deciding whether to migrate to Kubernetes.

Factors to consider before embracing K8s

Application Stack

Kubernetes scales very well for stateless applications and offers first-class support for them. Therefore, if you are running many web servers and proxies, Kubernetes is an ideal choice.

K8s is a good fit for applications that require tremendous scaling and expect considerable spikes in traffic. For example, if you run an e-commerce store and expect massive traffic during the holiday season, a Kubernetes-based platform will not disappoint you.

Recently, support for stateful applications has improved, with capabilities like persistent volume snapshots and restore. However, setting up stateful workloads is still tricky and calls for care. If you need high database throughput, it might be best to run the databases on bare metal servers instead of using VMs or containers.

DevOps Tool Kit

The DevOps tools you use are essential when adopting any new technology into the organization. They matter even more when you change the underlying application hosting platform. Therefore, make sure that your existing DevOps tools are compatible with a Kubernetes-based platform.

For example, a traditional monitoring stack built on Zabbix or Nagios might not be well suited to monitoring a Kubernetes infrastructure. The Kubernetes ecosystem leans heavily on Prometheus for monitoring, whereas tools like Zabbix and Nagios were created with other monitoring targets in mind.
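
To make the contrast concrete, here is a minimal sketch of how a sample looks in the Prometheus text exposition format: a metric name, a set of labels, and a value. Pods are identified by labels rather than by a fixed host entry, which is why this model suits workloads that come and go. The metric name, label names, and pod name below are illustrative assumptions, not taken from any setup described in this post.

```python
def escape_label_value(value: str) -> str:
    """Escape backslashes, double quotes, and newlines in a label value."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_sample(name: str, labels: dict[str, str], value: float) -> str:
    """Format one sample as a line of Prometheus text exposition output."""
    # Labels are sorted so the output is stable between runs.
    label_str = ",".join(
        f'{key}="{escape_label_value(val)}"' for key, val in sorted(labels.items())
    )
    return f"{name}{{{label_str}}} {value}"


if __name__ == "__main__":
    # Hypothetical pod and namespace names, for illustration only.
    print(render_sample("http_requests_total", {"pod": "web-7d9f", "namespace": "shop"}, 42.0))
```

When a pod is replaced, the new pod simply shows up with a different `pod` label value; nothing has to be registered by hand.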

The choice of CI/CD tooling should also be top-of-mind. Ensuring that the CI/CD infrastructure works well with containers and VMs is essential. A pipeline designed out of Jenkins and Spinnaker (or sometimes Jenkins alone) might fit well in both cases.

One thing to keep in mind before adopting any technology is that you have to maintain an efficient DevOps practice throughout the migration. You need to maintain visibility and effectiveness even as tools change over. This includes monitoring, log management for applications and infrastructure, and robust Continuous Integration (CI) / Continuous Delivery (CD) tooling.

During your Kubernetes migration, you can use MetricFire to maintain control over your DevOps processes. MetricFire offers Hosted Graphite-based monitoring, which integrates with Kubernetes and other containerized software. It can also monitor traditional VM-based infrastructure. MetricFire has a Heroku add-on called HG Heroku Monitoring, which makes it easy to monitor Heroku-based hybrid infrastructure.

With MetricFire, you can get complete insight into what’s going on during your Kubernetes migration at both the application and infrastructure level.

Projected Benefits of K8s

Once Kubernetes is part of your ecosystem, you can expect benefits in the following areas.

Self Healing

Kubernetes automatically handles failures such as crashed pods or degraded VMs. Such incidents should therefore not create a flurry of alerts for your operations teams: the Kubernetes control plane reschedules the affected pods, and on platforms with node auto-repair or cluster autoscaling, a new VM replaces the degraded one.
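
The idea behind self-healing is a reconciliation loop: compare the declared state with the observed state and act on the difference. The toy simulation below sketches that idea only. The `Cluster` class and the pod names are hypothetical, and the real controllers are considerably more involved.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy model of a workload with a declared replica count."""

    desired_replicas: int
    running: set[str] = field(default_factory=set)
    next_id: int = 0

    def reconcile(self) -> list[str]:
        """Start or stop pods until the running set matches the declared count."""
        actions = []
        while len(self.running) < self.desired_replicas:
            self.next_id += 1
            name = f"pod-{self.next_id}"
            self.running.add(name)
            actions.append(f"start {name}")
        while len(self.running) > self.desired_replicas:
            name = sorted(self.running)[-1]
            self.running.remove(name)
            actions.append(f"stop {name}")
        return actions


if __name__ == "__main__":
    cluster = Cluster(desired_replicas=3)
    print(cluster.reconcile())       # initial rollout
    cluster.running.discard("pod-2")  # simulate a crashed pod
    print(cluster.reconcile())       # the loop starts a replacement
```

Nobody has to be paged for the crash: the next pass of the loop notices the gap and fills it.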

Multi-cloud capability

Due to its portability, Kubernetes can host workloads on a single cloud or distribute them across multiple clouds. It also makes it easier to scale an environment from one cloud to another.

These features mean that Kubernetes lends itself well to the multi-cloud strategies many businesses are pursuing today. Other orchestrators may also work with multi-cloud infrastructures, but Kubernetes arguably goes above and beyond regarding multi-cloud flexibility.

Increased developer productivity

With its declarative constructs and ops-friendly approach, Kubernetes has fundamentally changed deployment methodologies. It allows teams to use GitOps. Teams can scale and deploy faster than they ever could in the past. Instead of one deployment a month, teams can now deploy multiple times a day.
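
As a rough illustration of what "declarative" means here, the sketch below builds a Deployment manifest as plain data. You describe the end state (three replicas of an image) rather than the steps to get there. The application name and image reference are placeholders, and generating manifests from Python is just one option among many.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Placeholder image reference, for illustration only.
    manifest = deployment_manifest("web", "example.com/web:1.4.2", 3)
    print(json.dumps(manifest, indent=2))
```

In a GitOps workflow, a file like this lives in a Git repository, and a change to `replicas` or `image` is rolled out by committing it. `kubectl apply -f` accepts JSON as well as YAML.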

Portability and flexibility

Kubernetes works with virtually any type of container runtime. (A runtime is an application that runs containers. There are a few different options on the market today.) In addition, Kubernetes can work with virtually any underlying infrastructure, whether a public cloud, a private cloud, or an on-premises server, so long as the host operating system is some version of Linux or a recent Windows version.

In these respects, Kubernetes is highly portable because it can be used in various infrastructure and environment configurations. Most other orchestrators lack this portability; they are tied to particular runtimes or infrastructures.

However, there could be cases when Kubernetes is not a good fit for your organization. Let’s examine those cases now.

When Kubernetes might not be a good fit

While this section is shorter than the benefits section, the points discussed here are critical. There are common situations where Kubernetes doesn’t help.

Your tech stack is sufficient

Perhaps you are not an engineering-focused organization, and the stack you are using is sufficient for your needs. For example, a small company sells coffee beans and other supplies using an e-commerce solution like Magento.

In such cases, your entire business does not depend on the software you are shipping/hosting. Your loads are consistent, and you do not need autoscaling. Using Kubernetes in this situation might be overkill.

You require high throughput from the underlying infrastructure

Running applications on bare metal rather than VMs or Containers is sometimes beneficial. This is usually so that you can get maximum performance. In such cases, K8s might not be worth it.

Developer Ramp Up

Kubernetes requires significant developer and DevOps expertise to run effectively. If you can meet your SLA and SLO requirements with your current systems, then adopting K8s might not even be necessary.
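
One way to check whether your current systems already meet an SLO is to turn the target into a downtime budget and compare it with what you actually observed. The 99.9% target and the 30-day window below are assumed values for illustration. Substitute your own.

```python
def allowed_downtime_minutes(slo_percent: float, window_days: int = 30) -> float:
    """Return the downtime budget, in minutes, implied by an availability SLO."""
    total_minutes = window_days * 24 * 60
    return total_minutes * (1 - slo_percent / 100)


def within_budget(observed_downtime_minutes: float, slo_percent: float, window_days: int = 30) -> bool:
    """Check whether observed downtime fits inside the SLO's budget."""
    return observed_downtime_minutes <= allowed_downtime_minutes(slo_percent, window_days)


if __name__ == "__main__":
    # Assumed target: 99.9% availability over 30 days (roughly 43 minutes of budget).
    print(round(allowed_downtime_minutes(99.9), 1))
    print(within_budget(25, 99.9))
```

If your existing platform stays comfortably inside that budget, reliability alone is a weak argument for migrating.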

Your software is not containerized

If you run your software on VMs without containerization, you are not ready for Kubernetes. Kubernetes is a container orchestrator and will only be valuable if you have containers to orchestrate. It will be tough to move from VMs directly to Kubernetes all in one go.

To Kubernetes or not Kubernetes, that is not the question

The real question is what you’re trying to achieve and why. Most companies do not run exclusively on Kubernetes or exclusively on VMs. Instead, they use Kubernetes in the specific areas of the business where it delivers results.

Next, we will examine two case studies from Xplenty and DreamFactory, illustrating how these two organizations adopted Kubernetes without overhauling their DevOps process.

Xplenty’s move to Kubernetes

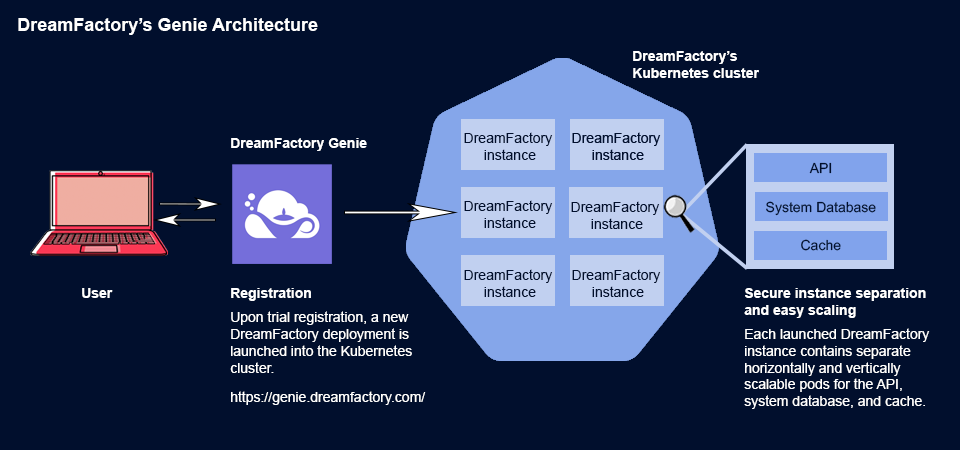

Heroku for the web app, Kubernetes for the data engine

Xplenty provides a data pipeline platform to organizations such as PwC, Deloitte, and Accenture. Their extremely data-heavy platform requires extensive computing capabilities to serve their customers.

The Xplenty platform can be categorized into two parts:

- A user-facing web application, which is also where their business logic is handled. This is hosted on Heroku.

- A data engine backend that also integrates with a ton of APIs. This ran on Amazon Web Services' Elastic Compute Cloud (EC2).

Xplenty is very satisfied with Heroku’s performance in hosting the web application. It also helps that Heroku offers a ton of add-ons that make DevOps processes easy. Xplenty uses the Sumo Logic add-on for logging and HG Heroku Monitoring to monitor their workloads.

However, managing data engines over EC2 instances was a real pain point for them. One of the significant drivers for Xplenty was the need to autoscale the data engine. With traditional EC2 instances, it took about 5-10 minutes for a new machine to come up and be ready to be used.

Therefore, the best solution was a hybrid approach where the web application would still be hosted on Heroku, but the data processing engine would be migrated to Kubernetes.

To start their journey, they moved their backend workloads to containers, which helped them build a container-based developer experience first. This made the migration easier to manage and kept it from feeling like an overhaul.

Then, they decided to use Kubernetes to orchestrate their workloads.

As a result, the autoscaling event that took close to 10 minutes was now happening in 20 seconds. This was a massive win for them.
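
To put those numbers in perspective, the short calculation below works out the speedup and the amount of work that queues up while new capacity is starting. The scale-up times come from the figures above. The arrival rate of 2 jobs per second is a made-up value, and the model assumes a constant excess load.

```python
EC2_SCALE_UP_SECONDS = (5 * 60, 10 * 60)  # 5-10 minutes on plain EC2 instances
K8S_SCALE_UP_SECONDS = 20                 # about 20 seconds on Kubernetes


def backlog_during_scale_up(arrival_rate_per_s: float, scale_up_seconds: float) -> float:
    """Jobs that pile up while waiting for new capacity to become ready."""
    return arrival_rate_per_s * scale_up_seconds


# Speedup for the best and worst EC2 case.
speedup = tuple(seconds / K8S_SCALE_UP_SECONDS for seconds in EC2_SCALE_UP_SECONDS)

if __name__ == "__main__":
    rate = 2.0  # hypothetical excess load in jobs per second
    print(speedup)
    print(backlog_during_scale_up(rate, EC2_SCALE_UP_SECONDS[1]))
    print(backlog_during_scale_up(rate, K8S_SCALE_UP_SECONDS))
```

Under these assumptions the scale-up is 15 to 30 times faster, and the backlog during a spike shrinks by the same factor.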

An added advantage was that the DevOps tools Xplenty was already using, namely Sumo Logic for logging and MetricFire for monitoring, integrated very well with both the Kubernetes-based platform and their Heroku app.

Now, a small team can handle the Xplenty DevOps processes, giving them more time to care for their customers and go the extra mile on features.

In summary, choosing Kubernetes was the right move for Xplenty, but they did not overdo it by moving the entire platform on top of Kubernetes. They are still satisfied with the web app being hosted on Heroku and plan to leave it there. They got what they needed out of Kubernetes, and their migration is complete.

This is a clear example of choosing Kubernetes only if it solves key pain points. Don’t adopt the technology blindly - make carefully measured choices and use it for strategic areas of the stack.

DreamFactory’s Kubernetes Adoption

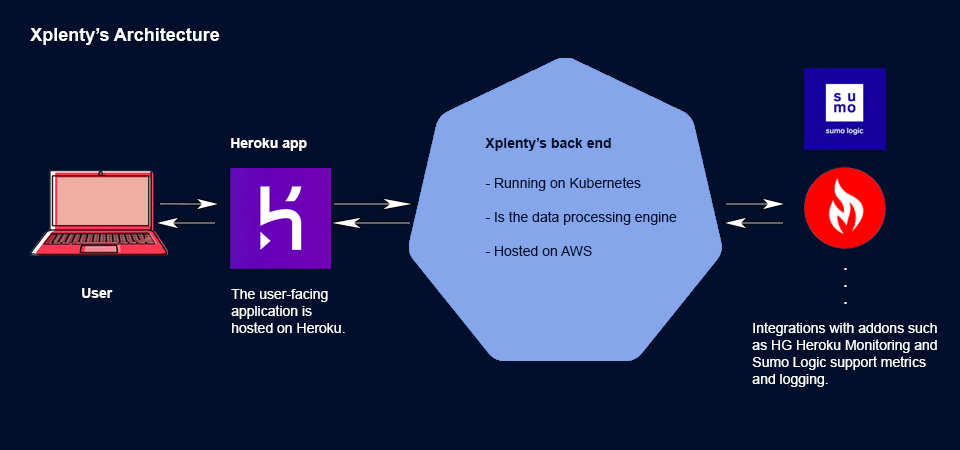

Kubernetes for autoscaling cloud-based free trials

DreamFactory provides an enterprise API platform and is being used by a wide array of organizations around the globe, including banks, financial institutions, automotive manufacturers, universities, and governments at all levels. Historically, most DreamFactory deployments have been on-premise, unlike traditional SaaS products. This model provides many advantages, including easier compliance with various regulatory requirements and the ability to deploy DreamFactory using a variety of solutions, Kubernetes included.

DreamFactory expanded its Kubernetes use in 2019 by launching a hosted solution, Genie. This offering is built atop Kubernetes and allows each DreamFactory instance to be securely launched in complete isolation from other instances and scaled to suit specific customer requirements. Genie also serves as DreamFactory's trial environment, launching new instances into the cluster via a self-serve trial registration process.

Thanks to Kubernetes' ability to launch, manage, and scale hundreds of DreamFactory environments, Genie's time to market was significantly reduced because the team wasn't required to refactor the platform to support the typical multi-tenant SaaS product requirements.

As with Xplenty, monitoring plays a crucial role within DreamFactory's hosted environment. An array of Grafana dashboards backed by Prometheus monitors platform health, and each customer is provided with their own dashboards for personal use.

Monitoring played a prominent role throughout the recent US Presidential election due to DreamFactory's partnership with Decision Desk HQ. Decision Desk HQ is just one of seven election reporting agencies relied upon by major US media outlets, and throughout the election, a great deal of reporting data passed through their DreamFactory-hosted environment (see this blog post for more information). Extensive monitoring was in place over this period, with the team relying heavily upon it to monitor the cluster and respond to any events.

DreamFactory’s example shows that Kubernetes can help you scale up and down quickly. This makes it cost-effective since you are not always running at peak capacity, and it also serves as a feature for customers who need seasonal scaling.

In summary, DreamFactory's reasons for using Kubernetes are:

- The traditionally on-premise solution is not multi-tenant, yet they wanted to launch a hosted solution without refactoring the application code. Kubernetes made it easy to launch Dockerized DreamFactory applications into the cloud, each wholly isolated and scalable to suit specific customer needs.

- Kubernetes manages autoscaling (both up and down) to suit present hosted requirements. If trials are brisk for one week, Kubernetes scales up to manage more DreamFactory instances. Conversely, if trials are slow around significant holidays, Kubernetes automatically scales the environment down, ensuring the team is not overspending.

- Using Kubernetes, Prometheus, and Grafana, DreamFactory can easily monitor the environment with little additional expense and overhead.
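
The first point, one isolated instance per customer, can be sketched as one namespace per tenant with a deployment inside it. This is a hypothetical illustration of the pattern, not a description of how Genie is actually implemented. The `trial-` prefix, the labels, and the image reference are all assumptions.

```python
import re


def tenant_manifests(tenant_id: str, image: str, replicas: int = 1) -> list[dict]:
    """Build a Namespace and a Deployment that isolate one tenant's instance."""
    # Namespace names must be DNS labels: lowercase letters, digits, and '-'.
    slug = re.sub(r"[^a-z0-9-]+", "-", tenant_id.lower()).strip("-")
    if not slug:
        raise ValueError("tenant_id must contain at least one letter or digit")
    namespace = f"trial-{slug}"
    labels = {"app": "dreamfactory", "tenant": slug}
    return [
        {
            "apiVersion": "v1",
            "kind": "Namespace",
            "metadata": {"name": namespace, "labels": {"tenant": slug}},
        },
        {
            "apiVersion": "apps/v1",
            "kind": "Deployment",
            "metadata": {"name": "dreamfactory", "namespace": namespace, "labels": labels},
            "spec": {
                "replicas": replicas,
                "selector": {"matchLabels": labels},
                "template": {
                    "metadata": {"labels": labels},
                    "spec": {"containers": [{"name": "dreamfactory", "image": image}]},
                },
            },
        },
    ]


if __name__ == "__main__":
    # Placeholder tenant and image, for illustration only.
    for manifest in tenant_manifests("Acme Corp!", "example.com/dreamfactory:1.0"):
        print(manifest["kind"], manifest["metadata"]["name"])
```

Because each tenant gets its own namespace, the application itself does not need to be multi-tenant, and removing a trial is a matter of deleting one namespace.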

Major Drivers of Adopting K8s

There are some powerful indicators that an organization should adopt Kubernetes. If these indicators describe your organization, then migrating to Kubernetes won’t mean overhauling your DevOps processes. Some of those key drivers are:

You are already using Docker

If your organization already uses Docker to package your applications, adopting Kubernetes is the next logical step. Kubernetes will make managing those containers easier and reduce operational overhead.

Need for Autoscaling

If your application needs to scale to handle traffic spikes, then using Kubernetes might be a good decision. It lets your workloads absorb sudden spikes in traffic while the underlying cluster scales to adjust capacity.
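
The core rule used by the Kubernetes Horizontal Pod Autoscaler is documented as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below is a simplified version of that rule. The replica bounds are illustrative, and the real controller additionally accounts for missing metrics, pods that are not ready, and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified Horizontal Pod Autoscaler calculation."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 50% target.
    print(desired_replicas(4, current_metric=90, target_metric=50))
```

With 4 replicas running at 90% CPU against a 50% target, the rule asks for 8 replicas. Once traffic drops, the same rule scales the workload back down.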

Frequent releases to a Production environment

If you follow an agile development cycle and need to make smaller but more frequent releases to the production environment, Kubernetes can help you achieve that.

Existing ecosystem of flexible DevOps Tools

Kubernetes works very well with many existing DevOps tools like Jenkins or Prometheus. If you already use them in your organization, Kubernetes adoption will not feel like a heavy task. If you’re using hosted monitoring tools like Hosted Prometheus or Hosted Graphite by MetricFire, you don’t need to worry about maintaining your monitoring stack when transitioning to Kubernetes.

Better Return On Investment

Kubernetes offers a better return on investment for the computing cost you pay for your applications. With the help of Kubernetes, you can pack more containers on a single host and then scale on demand. This means you don’t need to run at full capacity all the time. You can let Kubernetes do the heavy lifting of scheduling containers and scaling underlying machines when required.
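
The "pack more containers on a single host" argument can be illustrated with a simple first-fit-decreasing bin-packing estimate. This is not the algorithm the Kubernetes scheduler uses (it filters and scores nodes per pod). It is only a back-of-the-envelope sketch, and the CPU requests and node size are made-up values.

```python
def nodes_needed(pod_cpu_millicores: list[int], node_cpu_millicores: int) -> int:
    """Estimate the node count with first-fit-decreasing bin packing."""
    free: list[int] = []  # remaining CPU on each node opened so far
    for request in sorted(pod_cpu_millicores, reverse=True):
        if request > node_cpu_millicores:
            raise ValueError("a pod requests more CPU than one node provides")
        for index, remaining in enumerate(free):
            if request <= remaining:
                free[index] = remaining - request
                break
        else:
            # No existing node has room, so open a new one.
            free.append(node_cpu_millicores - request)
    return len(free)


if __name__ == "__main__":
    requests = [3000, 2000, 1000, 1000, 500, 500]  # hypothetical pod CPU requests
    print(len(requests))                 # one VM per workload
    print(nodes_needed(requests, 4000))  # packed onto 4-CPU nodes
```

In this example, six workloads that would otherwise occupy six VMs fit on two 4-CPU nodes.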

Significant indicators that using Kubernetes will be a considerable overhauling process

Kubernetes is not for everyone, and it is vital to understand this. In some scenarios, adopting Kubernetes will feel like a significant overhaul. A few indicators of those scenarios are:

Insufficient Engineering Experience

Kubernetes is a relatively new technology, and people are still learning it. Introducing it in an organization where the engineering team lacks prior experience might throw them off course. It could even lead to time sinks, where the team spends all its time learning Kubernetes rather than building business-critical applications. This could cost your business dearly.

Large Footprint of Stateful Applications

Some people argue that Kubernetes is still not ready to host stateful applications, despite the tremendous improvements made in that area with each release. Managing stateful workloads in containers is indeed tricky, and if your stack has a large footprint of such workloads, then migrating to Kubernetes might be a huge undertaking.

Incompatible DevOps Tool Kit

If you have been using traditional DevOps tools like Cacti or Nagios, adopting Kubernetes and making them work will be a significant overhaul. As both the Xplenty and DreamFactory scenarios show, adopting Kubernetes was easy because they used tools like MetricFire, Sumo Logic, and Prometheus. This is not limited to monitoring or logging tools but includes the entire Continuous Integration (CI) / Continuous Delivery (CD) tooling. A DevOps tool kit plays a crucial role in any technology adoption.

Security, Testing, and Compliance

Adopting Kubernetes means installing completely new tech on your infrastructure, which can be overwhelming. Also, Kubernetes provides a uniform view of the underlying infrastructure; therefore, you could run into compliance issues when hosting data-sensitive workloads alongside other workloads. These can be addressed with careful network segmentation and correct network policies. However, that is an undertaking in itself.
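
As a rough sketch of what such network policies look like, the functions below build two common NetworkPolicy manifests: one that denies all ingress to a namespace by default, and one that allows traffic only between pods in that same namespace. The `payments` namespace is a hypothetical example, and policies like these only take effect when the cluster's network plugin enforces NetworkPolicy.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Deny all incoming traffic to every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector selects every pod in the namespace.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_same_namespace(namespace: str) -> dict:
    """Allow incoming traffic only from pods in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


if __name__ == "__main__":
    # Hypothetical namespace holding data-sensitive workloads.
    for policy in (default_deny_ingress("payments"), allow_same_namespace("payments")):
        print(json.dumps(policy, indent=2))
```

Together, the two policies keep workloads in other namespaces from reaching the sensitive pods while leaving traffic inside the namespace untouched.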

Using Kubernetes means containerizing the application. Therefore, you need to change not only the infrastructure but also the application's entire test bench so that it accounts for containers and Kubernetes-specific settings.

Conclusion

We hope this blog has given you enough insight into the factors to consider before adopting Kubernetes. Designing the platform that hosts your applications is essential and should be done with care. If you need help on this journey of Kubernetes adoption, feel free to reach out to us!