Table of Contents

Great systems are not just built. They are monitored.

MetricFire is the fully managed Graphite and Grafana platform for small teams that don’t want to self-host their monitoring stack. Pre-built dashboards, alerts, and native add-ons for Heroku, AWS, Azure, and GCP. All with dedicated support and no infrastructure to maintain.

Introduction

Database latency rarely starts in your app layer because it’s almost always a query doing more work than it should. Metrics tell you when that happens, but slow-query logging tells you which statement did it and how. That’s gold for tracking down missing indexes, inefficient filters, or accidental full scans. Pair the logging with a some lightweight counter metrics, and you get both an early warning and a clear path to a fix. The workflow here is intentionally small and is safe and easy to configure in your production environment, so you can have full observability into your databases.

In this guide, you’ll enable slow-query logging, make the logs readable for MetricFire's Hosted Loki integration, and collect core MariaDB metrics with Telegraf. It’s minimal, reproducible, and production-friendly.

Start a chat with us today to test MetricFire's Logging Integration for FREE. We will help you every step of the way, from collection to visualization!

Step 1: Setup MariaDB Slow Query Logging

MariaDB is a community-driven fork of MySQL created by the original MySQL engineers after it was acquired by Oracle. It remains highly compatible (same client protocol/CLI, similar SQL syntax), and the same default config paths (logs to: /var/log/mysql/). Differences do exist (storage engines, some features, versioning), but they rarely affect basic logging/monitoring setups.

Install MariaDB (ubuntu)

This article assumes you have a running instance of mariaDB, but if not, its easy to install (ubuntu example):

sudo apt-get install -y mariadb-server

sudo systemctl start mariadb || sudo systemctl start mysql

sudo systemctl enable mariadb || sudo systemctl enable mysql

sudo systemctl status mariadb || sudo systemctl status mysql

Enable Slow Queries to Output to a Log File

Confirm that you have a mysql logging directory, with the required mysql user permissions (these should already be present with mariaDB - out of the box):

ls -ld /var/log/mysql

ls -l /var/log/mysql

Now you'll need to turn on the slow log, pick a threshold (1s), optionally log non-indexed queries, and write to a predictable file that survives restarts. This command will create a small, dedicated config file with persistent slow-log settings:

sudo tee /etc/mysql/mariadb.conf.d/99-slow.cnf >/dev/null <<'EOF'

[mysqld]

slow_query_log = 1

slow_query_log_file = /var/log/mysql/slow.log

long_query_time = 1

log_queries_not_using_indexes = 1 # optional - can be noisy in some workloads

EOF

Restart the service:

sudo systemctl restart mariadb || sudo systemctl restart mysql

Example Slow Query

This basic query generates a guaranteed slow entry (with a comment tag):

sudo mysql -e "SELECT SLEEP(2) AS delay_sec, 'mariadb test slow query' AS tag;"

Confirm that the statement printed to your log file (you should see an entry including Query_time: 2.000 and your comment):

sudo tail -n 50 /var/log/mysql/slow.log

Example Index Miss Query

An index miss happens when the optimizer can’t use an index for your filter or sort, forcing a full table scan). Full scans examine many more rows, driving up CPU/I/O and latency, and they scale poorly under load. Monitoring index misses catches these patterns early because slow-query and log_queries_not_using_indexes logs show the offending SQL Fixes usually involve adding the right index or rewriting predicates, which can dramatically cut response times. This query will create a demo database and force an index miss:

sudo mysql -e "

CREATE DATABASE IF NOT EXISTS demo;

CREATE TABLE IF NOT EXISTS demo.notes (id INT PRIMARY KEY, body TEXT);

INSERT INTO demo.notes VALUES (1, REPEAT('x',1000))

ON DUPLICATE KEY UPDATE body=VALUES(body);

SELECT 'mariadb test index miss' AS tag, SLEEP(2) AS delay_sec, COUNT(*) AS matches

FROM demo.notes WHERE body LIKE '%x%';

"

Locate the index miss in your log file:

sudo tail -n 50 /var/log/mysql/slow.log | grep -F "mariadb test index miss"

Step 2: Install and Configure Promtail to Collect System Logs

We support log collection via Promtail, OpenTelemetry Contrib, and Alloy. In this example, we'll detail how to configure Promtail since it is a simple and official log shipping agent for Grafana Loki. It runs as a lightweight binary that tails log files (like /var/log/*) and forwards them to our Hosted Loki endpoint over HTTP.

Install/unpack Promtail (Ubuntu)

wget https://github.com/grafana/loki/releases/download/v3.5.3/promtail_3.5.3_amd64.deb

sudo apt-get install -y ./promtail_3.5.3_amd64.deb

Configure Promtail to Forward Logs

Update your Promtail configuration file at /etc/promtail/config.yml to include the following:

server:

http_listen_port: 9080

grpc_listen_port: 0

positions:

filename: /tmp/positions.yaml

clients:

- url: https://<YOUR-API-KEY>@www.hostedgraphite.com/logs/sink

scrape_configs:

- job_name: mariadb-slow

static_configs:

- targets:

- localhost

labels:

host: <HOST-NAME>

job: mariadb-slow

__path__: /var/log/mysql/slow.log

NOTE: Make sure to replace YOUR-API-KEY and HOST-NAME in the above config and restart the Promtail service:

sudo systemctl restart promtail

sudo systemctl status promtail

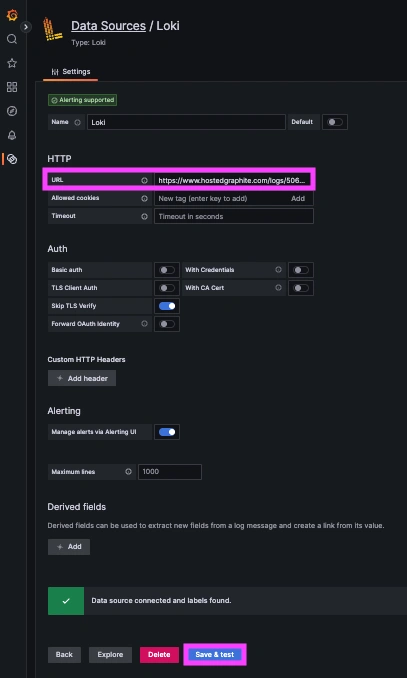

Step 3: Create a Loki Data Source in our Hosted Grafana

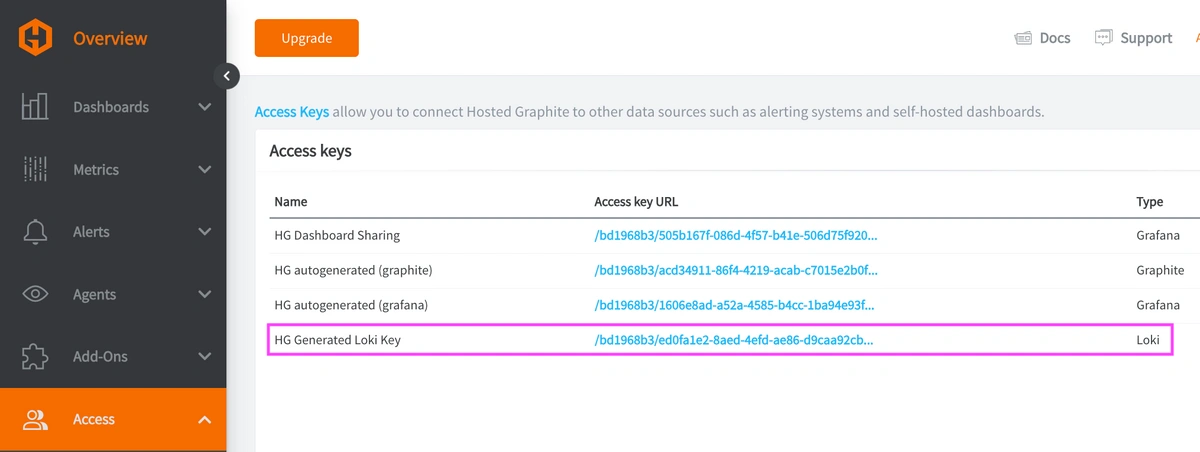

REACH OUT TO US about trying our new Logging feature for FREE, and we will create a Loki Access Key in your Hosted Graphite account. If you don't already have a Hosted Graphite account, sign up for a free trial here to obtain a Hosted Graphite API key and Loki Access Key.

Now within the Hosted Graphite UI, you can navigate to Dashboards => Settings => Data sources => Add New Data source (Loki). You'll be able to add the URL for your HG Loki endpoint, which includes your new Loki Access Key: https://www.hostedgraphite.com/logs/<UID>/<LOKI-ACCESS-KEY>

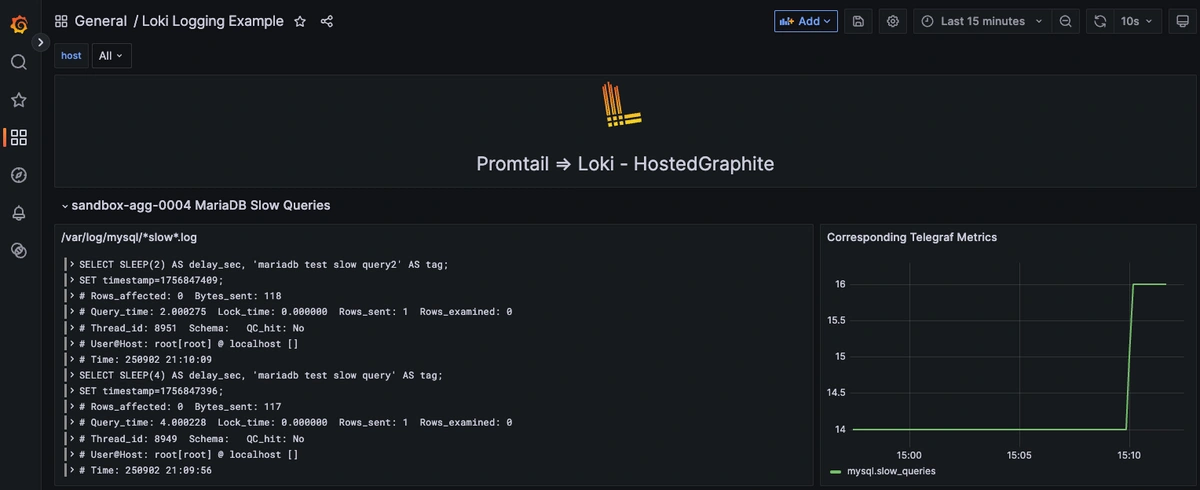

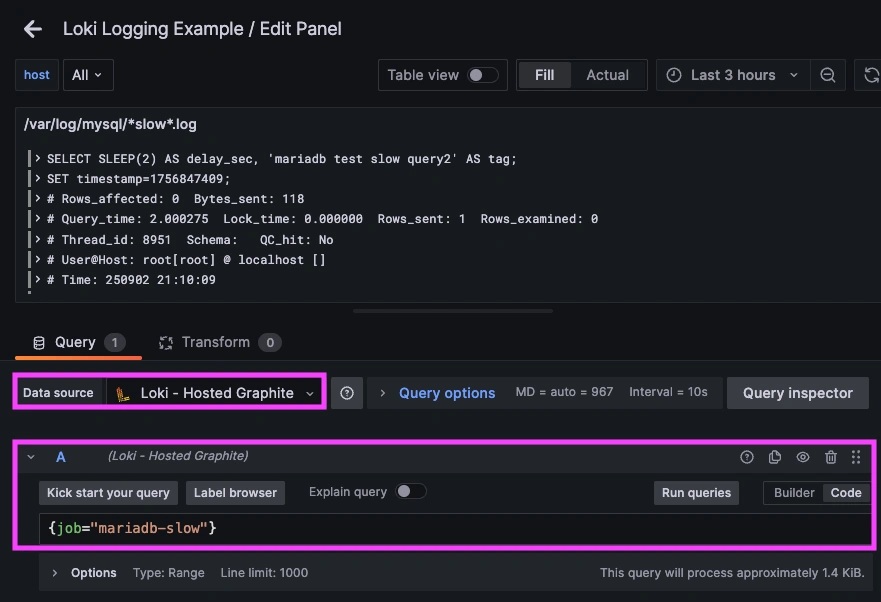

Step 4: Visualize the Slow Ops Logs

Once logs are forwarded to our Loki endpoint and the data source is connected in your Hosted Grafana, you can create a new dashboard panel, select Loki as your Data source, and format a query using 'code mode'.

Example Loki query: {job="mariadb-slow"}

Now you can send more example ops that take > 1s seconds to complete, and see them appear immediately on your dashboard!

Step 5: Configure Telegraf to Send Corresponding Metrics

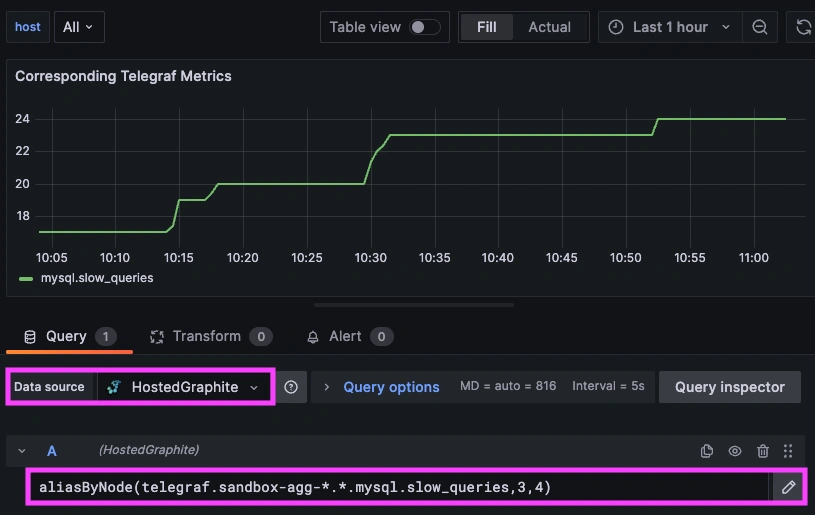

Use Telegraf's MySQL input plugin to scrape lightweight counter stats so you can graph trends alongside your slow logs.

Setup the Telegraf Collector

If you don't already have an instance of Telegraf running in your server, install our handy HG-CLI tool to quickly install/configure Telegraf:

curl -s "https://www.hostedgraphite.com/scripts/hg-cli/installer/" | sudo sh

NOTE: You will need to input your Hosted Graphite API key, and follow the prompts to select which metric sets you want.

Configure the MySQL Telegraf Input Plugin

Once Telegraf is installed, open its configuration file at: /etc/telegraf/telegraf.conf and add the following section:

[[inputs.mysql]]

servers = ["telegraf:STRONG_PASS_HERE@unix(/var/run/mysqld/mysqld.sock)/?tls=false"]

metric_version = 2

# fieldinclude = ["slow_queries"] ## collect ONLY the slow query metric

Create a Telegraf Read Only User For MySQL

Telegraf’s MySQL/MariaDB input only needs to read server status and metadata. Create a local user that can connect via the server’s Unix socket and read the counters that Telegraf scrapes:

sudo mysql -e "CREATE USER IF NOT EXISTS 'telegraf'@'localhost' IDENTIFIED BY 'STRONG_PASS_HERE';

GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'telegraf'@'localhost';

FLUSH PRIVILEGES;"

Ship Performance Metrics to Hosted Graphite

Simply save your updated conf file, and restart the Telegraf service to forward the mysql/mariadb metrics to your HG account. Or run it manually to inspect the output for potential syntax/permission errors:

telegraf --config /etc/telegraf/telegraf.conf

Once these metrics hit your Hosted Graphite account, you can use them to easily create custom dashboards and alerts!

Conclusion

Slow query log gives you the exact SQL behind slow downs, while metrics are lightweight signals you can alert on without parsing logs. After you add an index or tweak a query, you’ll see fewer slow-log entries and a flatter slow-queries curve, confirming the fix. This setup is easy and production-safe because it survives reboots and avoids high-cardinality labels/metrics. The big picture is that logs tell you why your DB is slow and metrics tell you how often, so you can spot a spike and quickly locate the offending SQL to implement a fix.

Ship logs alongside metrics and you’ll fix performance regressions with speed and confidence. Instead of jumping between tools or manually inspecting your server logs, you can correlate a spike in metrics with the exact log line that makes sense, all in a single dashboard.

MetricFire's Hosted Loki logging integration is quick to set up but powerful in practice. Whether you're tracking security threats, service restarts, failed jobs, or kernel anomalies, it gives you the visibility you need to stay ahead of problems and reduce the time it takes to resolve them.

Reach out to the MetricFire team today and let’s build something great together!