
Introduction

Growing container adoption brings plenty of difficult choices. We need to decide which orchestration tool best fits our situation, as well as how we will monitor the system. Docker is the standard container runtime, but there are several container orchestration tools to choose from. The leaders among these are Amazon's Elastic Container Service (ECS) and the CNCF's Kubernetes. In fact, one survey reports that 83% of organizations use Kubernetes as their container orchestration solution, versus 24% for ECS.

Here at MetricFire, our community members come to us with questions about both Kubernetes and AWS ECS. From a monitoring perspective, Grafana and Prometheus lean heavily towards Kubernetes and offer extensive Kubernetes support, but there is still a case for using AWS ECS. If you sign up for our free trial, you can start experimenting with metrics sent from both orchestration platforms.

This article will compare and contrast ECS and Kubernetes to help readers decide which one to use.

Benefits of using Kubernetes for Container Orchestration

- No lock-in, fully open source: Kubernetes can be used both on-premises and in the cloud without having to re-architect your container orchestration strategy. The software is fully open source and can be redeployed without incurring traditional software licensing fees. You can also run Kubernetes clusters across both public and private clouds, providing a layer of virtualization across public and private resources.

- Powerful flexibility: If you have an important revenue-generating application, Kubernetes is a great way to meet high availability requirements without sacrificing efficiency or scalability. With Kubernetes, you have granular control over how your workloads scale, and you avoid the vendor lock-in of ECS or other container services if you later need to switch to a more powerful platform.

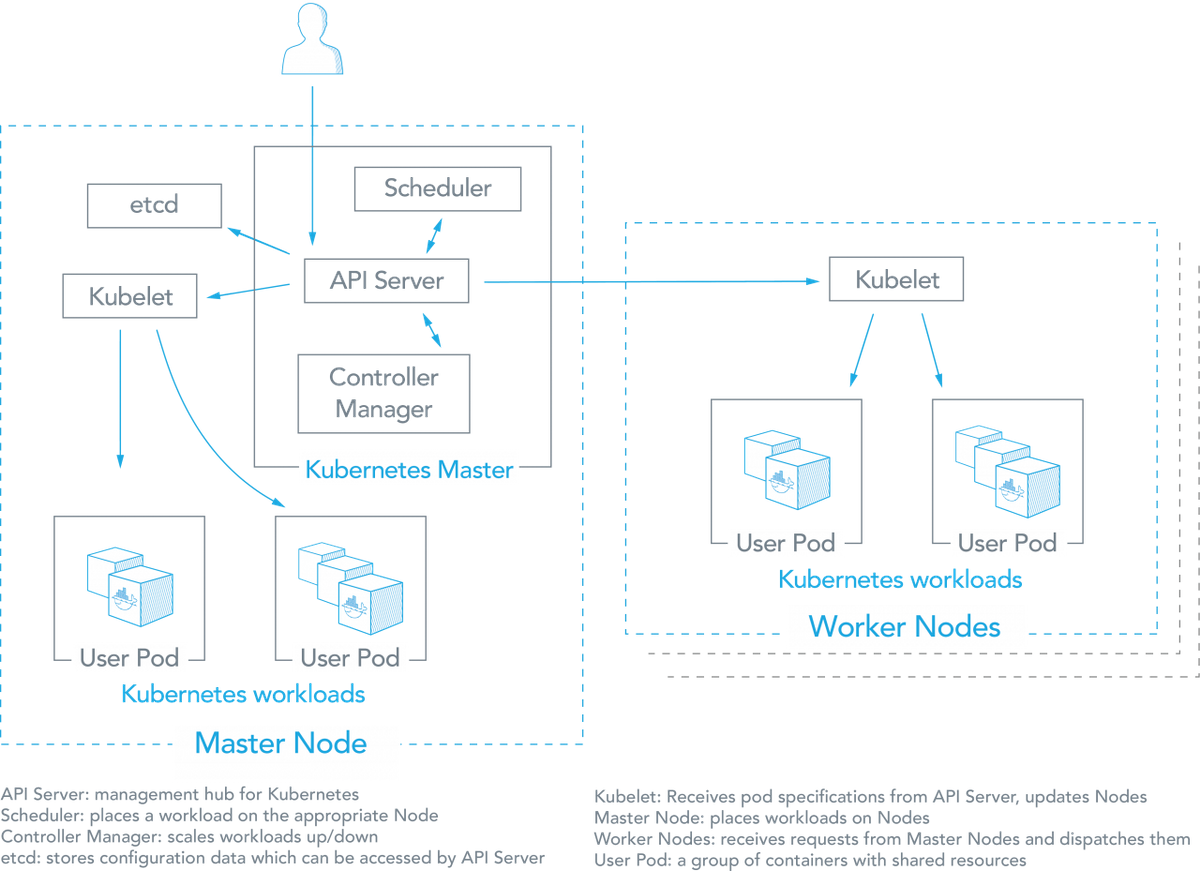

- High availability: Kubernetes is designed to address the availability of both applications and infrastructure, making it indispensable when deploying containers in production:

  - Health checks and self-healing: Kubernetes guards your containerised application against failures by continuously checking the health of nodes and containers. It also offers self-healing and auto-replacement: if a container crashes, Kubernetes restarts it, and if a pod is lost (for example, because its node fails), a controller such as a Deployment replaces it.

  - Traffic routing and load balancing: Traffic routing sends requests to the appropriate containers. Kubernetes also has built-in load balancing to distribute traffic across multiple pods, so you can quickly (re)balance resources in response to outages, peak or incidental traffic, and batch processing. You can also use external load balancers.

- Workload scalability: Kubernetes is known for using infrastructure resources efficiently and offers several useful scaling features:

  - Horizontal infrastructure scaling: Kubernetes scales horizontally at the level of individual servers (nodes), which can easily be added to or removed from a cluster.

  - Auto-scaling: The Horizontal Pod Autoscaler automatically changes the number of running pods based on CPU utilisation or other application-provided metrics.

  - Manual scaling: You can manually scale the number of running pods with a command such as `kubectl scale` or through a dashboard.

  - Replication controller: A ReplicationController makes sure your cluster has a specified number of identical pods (each a group of one or more containers) running. If there are too many pods, it terminates the extras; if there are too few, it starts more. In current Kubernetes, a Deployment that manages a ReplicaSet is the recommended way to get this behaviour.

- Designed for deployment: One of the main benefits of containerization is the ability to speed up building, testing, and releasing software. Kubernetes is designed for deployment and offers several useful features:

  - Automated rollouts and rollbacks: Want to roll out a new version of your app or update its configuration? Kubernetes performs a rolling update with little or no downtime, monitoring the containers' health as it goes. If the new version fails its health checks, the rollout stalls, and you can revert to the previous revision with a single command (`kubectl rollout undo`); by default, Kubernetes does not roll back on its own.

  - Canary deployments: Canary deployments let you test a new version in production alongside the previous one, before scaling up the new deployment while scaling down the previous one.

- Programming language and framework support: Kubernetes supports a wide spectrum of programming languages and frameworks, such as Java, Go, and .NET, and the developer community maintains support for many more. If an application can run in a container, it should run well on Kubernetes.

- Service discoverability: It's important that all services have a predictable way of communicating with one another. However, within Kubernetes, containers are created and destroyed many times over, so a particular service may not stay at a particular location. Traditionally, this meant creating a service registry, or building one into the application logic, to keep track of each container's location. Kubernetes has a native Service concept that groups your Pods and simplifies service discovery: Kubernetes provides an IP address for each Pod, assigns a DNS name to each set of Pods, and load-balances traffic across the Pods in a set. This abstracts service discovery away from the container level.

- Network policy as part of application deployment: By default, all Pods in Kubernetes can communicate with each other. A cluster administrator can declaratively apply network policies that restrict access to certain Pods or Namespaces. Basic restrictions are enforced by using label selectors to name the Pods or Namespaces that certain Pods may receive traffic from (ingress) or send traffic to (egress). Note that network policies only take effect if the cluster's network plugin supports them.

- Long-term solution: Given the rise of Kubernetes, many cloud providers are shifting their R&D focus to expanding their managed Kubernetes services over legacy options. Consider Kubernetes as you develop your long-term IT strategy.

- Ongoing development: Soon after its first release, Kubernetes gained a very large and active community. It has about 2,000 GitHub contributors at the time of writing, ranging from engineers at Fortune 500 companies to individual developers, and new features are released constantly. The large and diverse user community also steps in to answer questions and foster collaboration.

- Vibrant community: Kubernetes has an active community with a breadth of modular, open source extensions backed by major companies and institutions such as the CNCF. Several thousand developers and many large corporations contribute to Kubernetes, making it the platform of choice for modern software infrastructure. This also means the community is not only collaborating actively but also building features that solve modern problems.
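The auto-scaling item in the list above is handled in Kubernetes by the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below illustrates only that arithmetic. The function name and the default min/max replica bounds are illustrative assumptions, the 0.1 tolerance mirrors the controller's default, and real behaviour such as stabilization windows and the handling of not-yet-ready pods is deliberately left out.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,    # illustrative bound, set per autoscaler in practice
    max_replicas: int = 10,   # illustrative bound, set per autoscaler in practice
    tolerance: float = 0.1,   # mirrors the HPA controller's default tolerance
) -> int:
    """Sketch of the Horizontal Pod Autoscaler's core scaling rule.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped to
    [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # 4 pods at 63% against a 60% target: within tolerance, no change.
    print(desired_replicas(4, 63, 60))
```

Because the rule is proportional, a set of pods running at 1.5 times its target utilisation grows by about 50%, and the tolerance band keeps the autoscaler from reacting to small fluctuations.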
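The automated rollouts described in the list above are governed, for a Deployment using the RollingUpdate strategy, by two settings: maxSurge (how many pods above the desired count may exist during the update) and maxUnavailable (how many of the desired pods may be unavailable). Both default to 25%, with maxSurge percentages rounded up and maxUnavailable percentages rounded down. The Python sketch below computes the resulting pod-count bounds; the helper names are hypothetical and nothing here talks to a cluster.

```python
import math
from typing import Tuple, Union

# A rollout setting is either an absolute pod count (2) or a percentage ("25%").
Setting = Union[int, str]


def _to_pods(setting: Setting, replicas: int, round_up: bool) -> int:
    """Resolve an absolute count or a percentage string to a number of pods."""
    if isinstance(setting, str) and setting.endswith("%"):
        exact = replicas * int(setting[:-1]) / 100
        return math.ceil(exact) if round_up else math.floor(exact)
    return int(setting)


def rollout_bounds(
    replicas: int,
    max_surge: Setting = "25%",
    max_unavailable: Setting = "25%",
) -> Tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update.

    maxSurge percentages round up and maxUnavailable percentages round down,
    matching the documented Deployment semantics.
    """
    surge = _to_pods(max_surge, replicas, round_up=True)
    unavailable = _to_pods(max_unavailable, replicas, round_up=False)
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rollout_bounds(10))                                 # defaults
    print(rollout_bounds(10, max_surge=0, max_unavailable=1))
```

For example, with 10 replicas and the defaults, the rollout may run up to 13 pods while keeping at least 8 available; setting maxSurge to 0 and maxUnavailable to 1 trades rollout speed for never exceeding the desired pod count.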
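The replication controller item in the list above, and the self-healing it enables, follows a reconcile pattern: observe the current state, compare it with the desired state, and act on the difference. The Python sketch below models a single pass of that loop over a plain list of pod names. The function and the pod naming scheme are hypothetical; a real controller works through the Kubernetes API server and chooses which pods to delete more carefully than simply trimming the list.

```python
import itertools
from typing import Iterator, List

# Hypothetical name generator standing in for the API server creating pods.
_next_id: Iterator[int] = itertools.count(1)


def reconcile(running: List[str], desired: int) -> List[str]:
    """One pass of a simplified replica-count control loop.

    Too many pods: the extras are terminated (here, trimmed from the end).
    Too few pods: new ones are started until the count matches `desired`.
    """
    pods = list(running)  # never mutate the caller's observed state
    if len(pods) > desired:
        del pods[desired:]
    while len(pods) < desired:
        pods.append(f"pod-{next(_next_id)}")
    return pods


if __name__ == "__main__":
    pods = reconcile([], 3)       # start from nothing: three pods are created
    print(pods)
    pods.pop(1)                   # simulate a crashed pod disappearing
    pods = reconcile(pods, 3)     # the loop notices and starts a replacement
    print(pods)
    print(reconcile(pods, 2))     # scaling down terminates the extra pod
```

Running the loop repeatedly against observed state, rather than reacting to individual events, is what lets a controller converge back to the desired count after crashes, node failures, or manual changes.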

Benefits of using AWS Elastic Container Service

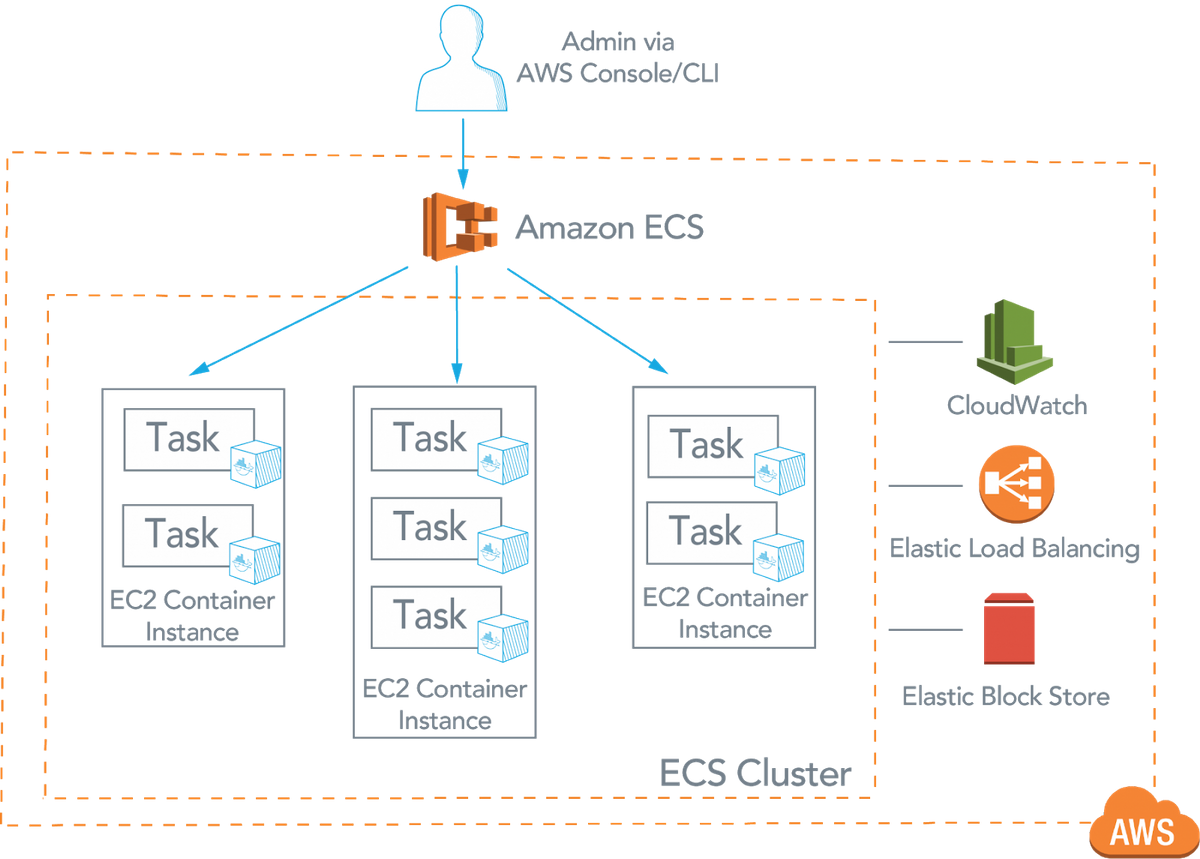

- Traditional ECS: ECS powered by Amazon EC2 compute was launched in 2015 as a way to easily run Docker containers in the cloud. Traditional ECS gives you control over the underlying EC2 compute for your containers, so you can select the instance types your workloads run on. It also hooks you into other AWS services used for monitoring and logging activity on those EC2 instances.

- Fargate ECS: Fargate, on the other hand, was released in 2017 as a way to run containers without having to manage the underlying EC2 compute. Instead of choosing instances, you specify the CPU and memory each task needs, and Fargate provisions and manages the compute for you. Fargate is usually a good option if you need to get a workload up and running quickly and don't want to size or manage the underlying servers.

- Good for small workloads: If you plan to run smaller workloads that are not expected to scale tremendously, ECS is a good choice. Its task definitions are easier to register and understand.

- Less complex application architectures: If your application is composed of only a few microservices that work more or less independently, and the overall architecture is not very complex (it doesn't have many external dependencies or moving parts), ECS is a good candidate to start with.

- Easier learning curve: Kubernetes has a steep learning curve, which is the primary reason hosted Kubernetes offerings have succeeded compared with more traditional self-managed installations built with kubeadm or kops. With offerings like ECS Fargate, you don't even need to worry about the underlying host: AWS manages it for you.

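- Easier monitoring and logging: ECS integrates closely with Amazon CloudWatch monitoring and logging, so little extra work is needed to get visibility into container workloads running on ECS. Container logs can be sent to CloudWatch Logs by setting the awslogs log driver in the task definition, and CloudWatch Container Insights can be enabled for cluster-, service-, and task-level metrics.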
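The task definitions mentioned in the list above are JSON documents registered with the ECS API. The Python sketch below builds a minimal Fargate task definition that also sends container logs to CloudWatch Logs through the awslogs log driver. The helper name, its parameters, and all example values are hypothetical. The size table covers only the smaller CPU/memory combinations Fargate accepts (CPU in units, where 1024 = 1 vCPU; memory in MiB), so check the current AWS documentation for the full list.

```python
import json
from typing import Any, Dict, List

# Supported task-level sizes for the smaller Fargate configurations:
# CPU units (1024 = 1 vCPU) -> allowed memory values in MiB.
# Larger sizes exist; consult the current AWS Fargate documentation.
FARGATE_SIZES: Dict[int, List[int]] = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def fargate_task_definition(
    family: str,
    image: str,
    cpu: int,
    memory: int,
    execution_role_arn: str,
    log_group: str,
    region: str,
    container_port: int = 80,
) -> Dict[str, Any]:
    """Build keyword arguments for ECS RegisterTaskDefinition on Fargate."""
    if memory not in FARGATE_SIZES.get(cpu, []):
        raise ValueError(f"unsupported Fargate size: cpu={cpu}, memory={memory}")
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # Fargate tasks use awsvpc networking
        "cpu": str(cpu),          # the API takes task-level sizes as strings
        "memory": str(memory),
        "executionRoleArn": execution_role_arn,
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [{"containerPort": container_port, "protocol": "tcp"}],
                "logConfiguration": {
                    # Ship the container's stdout/stderr to CloudWatch Logs.
                    "logDriver": "awslogs",
                    "options": {
                        "awslogs-group": log_group,
                        "awslogs-region": region,
                        "awslogs-stream-prefix": family,
                    },
                },
            }
        ],
    }


if __name__ == "__main__":
    payload = fargate_task_definition(
        family="web",  # hypothetical values throughout
        image="nginx:latest",
        cpu=256,
        memory=512,
        execution_role_arn="arn:aws:iam::123456789012:role/ecsTaskExecutionRole",
        log_group="/ecs/web",
        region="us-east-1",
    )
    print(json.dumps(payload, indent=2))
```

With AWS credentials configured, the resulting dictionary can be passed to boto3, for example `boto3.client("ecs").register_task_definition(**payload)`. The execution role needs permission to write to CloudWatch Logs, and the log group must already exist unless the awslogs-create-group option is set.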

Challenges for Kubernetes Adoption

- Getting a grasp on the Kubernetes landscape: Understanding the Kubernetes landscape is critical to getting started, since you need to combine a variety of other technologies and services to deliver an end-to-end solution. But the maturity of each supplemental technology varies significantly. For instance, some solutions date back to the days when Unix reigned supreme, while others are less than a year old, with low commercial adoption and support. So, in addition to figuring out which ones you can safely include in your implementation, you need to understand how each component fits into the larger solution. While there is plenty of information and documentation on this, it's scattered and hard to distill, which makes it difficult to figure out the best solution for a particular job. Even once you settle on the solutions you'll use, you need a solid plan for delivering them as a service and managing them on an ongoing basis.

- Understanding the difference between features and projects: The challenges don't end there. While it's helpful (yet difficult) to find advice on managing a project's lifecycle, that doesn't resolve the confusion around distinguishing a Kubernetes feature from a Kubernetes community project. The beauty of an open source technology like Kubernetes is that its community of users can create and share innovative uses. However, that same benefit can also muddy the waters. While special interest groups can develop features that are added to core Kubernetes, other standalone projects remain just that: outside the core. Projects contributed by an individual developer or vendor might not be ready for prime time; in fact, many are at different stages of development. (Note: if it isn't in the official Kubernetes repositories on GitHub, it's not an official Kubernetes feature.)

- Becoming knowledgeable on more than Kubernetes: All this confusion is compounded by the complexity of delivering solutions. Kubernetes itself is sophisticated, but organizations also want to deliver other elaborate solutions, such as distributed data stores, as a service. Combining and managing all these services can further amplify the challenges. Not only do you need to be an expert in Kubernetes, you also need to be skilled in everything you're going to deliver as part of an end-to-end service.

- Kubernetes management is difficult: Going live with Kubernetes is one thing; managing it is another. Kubernetes management is a largely manual exercise because all you get with Kubernetes is Kubernetes. The platform doesn't come with anything to run it, so you need to figure out how to deliver resources to Kubernetes itself, which is no easy feat.

When it comes to serving the needs of the enterprise, you'll need to harden Kubernetes' security and integrate it with your existing infrastructure. Besides handling upgrades, patches, and other infrastructure-specific management tasks, you'll need the right knowledge, expertise, processes, and tools to operate and scale Kubernetes effectively. To deliver Kubernetes as a service to different lines of business and users, you need to resolve the following management challenges:

- Multiple Kubernetes clusters are difficult to manage: Managing multiple Kubernetes clusters puts more pressure on your internal teams, requiring a big time and resource investment.

- Monitoring and troubleshooting Kubernetes is difficult: As with any complex system, things can and do break. Out of the box, Kubernetes makes it hard to test at scale, to fix problems when something goes wrong, and to automate upgrades.

- Difficult to support at scale: For an enterprise organization, scale is critical, but it's tough to support scale with out-of-the-box Kubernetes. Versioning multiple clusters is time-consuming and difficult, and it requires special resource planning. Plus, to truly scale the platform, you need to run configuration scripts manually.

Conclusion

It should be clear by now that Kubernetes, despite some shortcomings, leads the race for container orchestration; in fact, it is the clear winner. Kubernetes has become the de facto standard for container orchestration, and organizations both large and small are investing heavily to adopt it.

Amazon ECS, although a good option, falls short in many places. With the right toolchain, Kubernetes adoption is not only seamless but also beneficial for the future, as it makes you truly cloud native. We offer many solutions that make observability of Kubernetes clusters and their deployed workloads extremely easy. Feel free to reach out for all your monitoring and logging needs, and we will be happy to help. Just book a demo for a video call, or sign up for our free trial, where you can talk to us directly through our customer support chat box.