Table of Contents

- Kubernetes for IoT Metrics Load Balancing

- Setting Up Kubernetes for IoT Metrics Load Balancing

- Implementing Autoscaling for IoT Metrics Pods

- Advanced Load Balancing Techniques for Multi-Cloud IoT Metrics

- Integrating MetricFire for IoT Metrics Monitoring

- Testing and Optimizing IoT Metrics Load Balancing

- Conclusion

- FAQs

Kubernetes for IoT Metrics Load Balancing

Kubernetes struggles with efficiently balancing IoT metrics in multi-cloud environments due to its default static load balancing and lack of proximity-based routing. This article outlines how to tackle these challenges by:

- Leveraging edge computing to reduce latency by processing metrics closer to their source.

- Configuring Kubernetes services (e.g., LoadBalancer with externalTrafficPolicy: Local) for IoT-specific needs.

- Implementing Horizontal Pod Autoscaler (HPA) with custom IoT metrics for dynamic scaling.

- Using advanced tools like Istio for intelligent, multi-cloud traffic routing.

- Monitoring performance with MetricFire, combining Prometheus and Grafana to track metrics and set alerts.

Whether you're managing thousands of devices or optimizing multi-cloud setups, these strategies can ensure efficient, low-latency IoT metric handling.

Setting Up Kubernetes for IoT Metrics Load Balancing

Prerequisites for Kubernetes Configuration

To start balancing IoT metrics, you'll need a Kubernetes cluster with at least two nodes. This setup ensures you can effectively test both load balancing and high availability. You can choose from managed services like Google Kubernetes Engine (GKE) or Amazon Elastic Kubernetes Service (EKS) for ease of setup, or use Minikube locally if you're working on development.

A Metrics Server is crucial for autoscaling. It requires only about 1m (one millicore) of CPU and 2 MB of memory per node, and supports clusters of up to 5,000 nodes. For production environments, deploy the Metrics Server in high-availability mode with two replicas. Also, set --enable-aggregator-routing=true on the kube-apiserver so the aggregation layer routes requests to endpoint IPs rather than the cluster IP.

To simulate realistic IoT traffic, an MQTT toolkit like Mosquitto is invaluable. Its command-line clients (for example, mosquitto_pub) can publish high volumes of test messages, helping you test how well your load balancing setup performs. Finally, configure MetricFire's Hosted Graphite as your external storage and visualization tool for balanced IoT metrics. This ensures long-term data retention and ease of monitoring.

Once your prerequisites are in place, you can move forward with deploying Kubernetes services for IoT metrics.

Deploying Kubernetes Services for IoT Metrics

Kubernetes provides three primary service types for exposing IoT metrics endpoints:

- ClusterIP: The default service type, ideal for internal communication between pods. It exposes the service on an internal IP.

- NodePort: Exposes the service on each node's IP at a static port, by default within the range 30000-32767. This is useful for direct access to nodes.

- LoadBalancer: Best suited for cloud environments, this service provisions an external load balancer to distribute incoming IoT traffic across pods.

Here’s an example of a YAML configuration for a LoadBalancer service:

    apiVersion: v1
    kind: Service
    metadata:
      name: iot-metrics-service
    spec:
      selector:
        app: iot-processor
      ports:
        - protocol: TCP
          port: 80
          targetPort: 9376
      type: LoadBalancer

For IoT use cases requiring device identification or geo-fencing, you can add externalTrafficPolicy: Local to the configuration. This ensures the original source IP of devices is preserved. However, keep in mind that this setting could lead to uneven traffic distribution if pods aren’t evenly spread across nodes. Additionally, you can specify an appProtocol (e.g., kubernetes.io/ws for WebSockets) to optimize the load balancer for IoT-specific protocols.
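As a rough sketch of how these two settings fit into the Service manifest, the variant below adds externalTrafficPolicy: Local and an appProtocol hint. The port name ws-ingest is a hypothetical label chosen for illustration, and whether the appProtocol hint is honored depends on the load balancer implementation your cloud provider uses.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: iot-metrics-service
spec:
  type: LoadBalancer
  # Route only to pods on the node that received the traffic,
  # which preserves the device's original source IP.
  externalTrafficPolicy: Local
  selector:
    app: iot-processor
  ports:
    - name: ws-ingest              # hypothetical port name
      protocol: TCP
      appProtocol: kubernetes.io/ws
      port: 80
      targetPort: 9376
```

Because Local only routes to pods on the receiving node, spreading the iot-processor pods evenly across nodes matters more with this setting than with the default.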

These configurations help ensure optimal routing of IoT metrics, even in complex multi-cloud setups.

To monitor and analyze your IoT metrics, consider signing up for a free trial of Hosted Graphite or scheduling a demo with MetricFire. This integration makes it easier to track and visualize your metrics effectively.

Implementing Autoscaling for IoT Metrics Pods

Autoscaling plays a critical role in managing fluctuating IoT traffic. It ensures resources are used efficiently by scaling workloads up during traffic spikes and down during quieter periods. The Horizontal Pod Autoscaler (HPA) is a key tool in this process, automatically adjusting pod replicas based on metrics it observes.

The HPA operates on a 15-second sync cycle and calculates the required replicas using this formula: ceil(currentReplicas * (currentMetricValue / desiredMetricValue)). For example, if the current metric value is double the desired target, the HPA will double the number of pods, staying within the configured limits. To make this work, you’ll need to configure HPA with custom IoT metrics effectively.
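To make the formula concrete, here is a worked example with assumed numbers: 3 running replicas, a target of 1,000 messages per second per pod, and an observed average of 1,500.

```latex
\[
\text{desiredReplicas}
  = \left\lceil \text{currentReplicas} \times
    \frac{\text{currentMetricValue}}{\text{desiredMetricValue}} \right\rceil
  = \left\lceil 3 \times \frac{1500}{1000} \right\rceil
  = \lceil 4.5 \rceil
  = 5
\]
```

The ceiling means the HPA rounds up to the next whole pod, so this workload would grow from 3 to 5 replicas, provided 5 does not exceed the configured maxReplicas.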

Preparing Pods for Autoscaling

First, define resource requests in your pod specifications. Without these, the HPA cannot calculate utilization, leaving it inactive. Also, remove the spec.replicas field from your Deployment manifests so the HPA can handle scaling decisions.
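A minimal Deployment sketch that follows both recommendations might look like this. The image name and the request values are assumptions for illustration; size the requests from your own load tests.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: iot-processor
spec:
  # spec.replicas is intentionally omitted so the HPA owns the replica count.
  selector:
    matchLabels:
      app: iot-processor
  template:
    metadata:
      labels:
        app: iot-processor
    spec:
      containers:
        - name: iot-processor
          image: registry.example.com/iot-processor:1.0   # hypothetical image
          ports:
            - containerPort: 9376
          resources:
            requests:
              cpu: 250m        # assumed value
              memory: 256Mi    # assumed value
```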

To avoid constant scaling adjustments, the HPA applies a 5-minute stabilization window for scaling down. Additionally, it ignores changes when the difference between current and desired metrics is within a 10% tolerance. For IoT workloads prone to bursty traffic, you might want to increase the stabilizationWindowSeconds in the HPA behavior field. This helps maintain sufficient capacity during brief traffic dips.
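The fragment below sketches how a longer scale-down window could be expressed in the behavior field of an autoscaling/v2 HPA. The 600-second window and the 50% policy are assumed values, not recommendations.

```yaml
# Fragment of a HorizontalPodAutoscaler (autoscaling/v2) spec
spec:
  behavior:
    scaleDown:
      stabilizationWindowSeconds: 600   # assumed value; the default is 300
      policies:
        - type: Percent
          value: 50                     # remove at most half of the pods...
          periodSeconds: 60             # ...in any 60-second period
```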

Configuring HPA with Custom Metrics

While basic autoscaling relies on CPU and memory, IoT workloads often need custom metrics for better responsiveness. Setting up custom metrics requires three components:

- An application that exposes metrics.

- A collection agent like Prometheus to gather those metrics.

- A metrics adapter to make the metrics accessible to the Kubernetes API.

Using Helm, you can install the Prometheus Adapter, which acts as a bridge between Prometheus and Kubernetes, making IoT-specific metrics available to the HPA controller. Ensure your HPA manifest uses the autoscaling/v2 API version, as this supports custom metrics.
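As an illustration of the adapter step, the rule below sketches how a Prometheus counter could be exposed to the HPA as a per-second rate. It assumes the application exports a counter named iot_messages_total with namespace and pod labels; that metric name is hypothetical.

```yaml
# Prometheus Adapter rule (in the Helm chart this list sits under rules.custom)
rules:
  - seriesQuery: 'iot_messages_total{namespace!="",pod!=""}'
    resources:
      overrides:
        namespace: {resource: "namespace"}
        pod: {resource: "pod"}
    name:
      # Expose iot_messages_total as iot_messages_per_second
      matches: "^(.*)_total$"
      as: "${1}_per_second"
    metricsQuery: 'sum(rate(<<.Series>>{<<.LabelMatchers>>}[2m])) by (<<.GroupBy>>)'
```

With a rule like this in place, the metric name the HPA requests is the renamed one (iot_messages_per_second), not the raw counter.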

Here’s an example HPA configuration for IoT message throughput:

    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: iot-metrics-hpa
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: iot-processor
      minReplicas: 2
      maxReplicas: 10
      metrics:
        - type: Pods
          pods:
            metric:
              name: iot_messages_per_second
            target:
              type: AverageValue
              averageValue: 1000m

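In this example, the m suffix denotes milli-units in Kubernetes quantity notation, so 1000m equals an average of 1 message per second per pod. Raise the value to match the throughput a single pod can actually sustain.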

Custom metrics can be defined as the Pods type (averaged across all pods) or as the Object type (used for specific resources like an Ingress). For IoT deployments with sidecar containers (e.g., for logging or proxying), consider using ContainerResource metrics. This allows scaling decisions to focus on the primary application container’s usage, excluding the sidecar’s resource consumption.
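A ContainerResource metric could be sketched as follows. The container name and the 60% target are assumptions, and the metric type requires a Kubernetes version in which ContainerResource metrics are available.

```yaml
# Fragment of a HorizontalPodAutoscaler (autoscaling/v2) spec
spec:
  metrics:
    - type: ContainerResource
      containerResource:
        name: cpu
        container: iot-processor      # assumed name of the main container
        target:
          type: Utilization
          averageUtilization: 60      # assumed target
```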

Troubleshooting HPA

To diagnose HPA-related issues, you can use commands like kubectl get hpa --watch to monitor scaling decisions in real time. Alternatively, kubectl describe hpa provides detailed information, including the status.conditions field, which can help identify health indicators. These tools are indispensable for ensuring your autoscaling setup runs smoothly.

Advanced Load Balancing Techniques for Multi-Cloud IoT Metrics

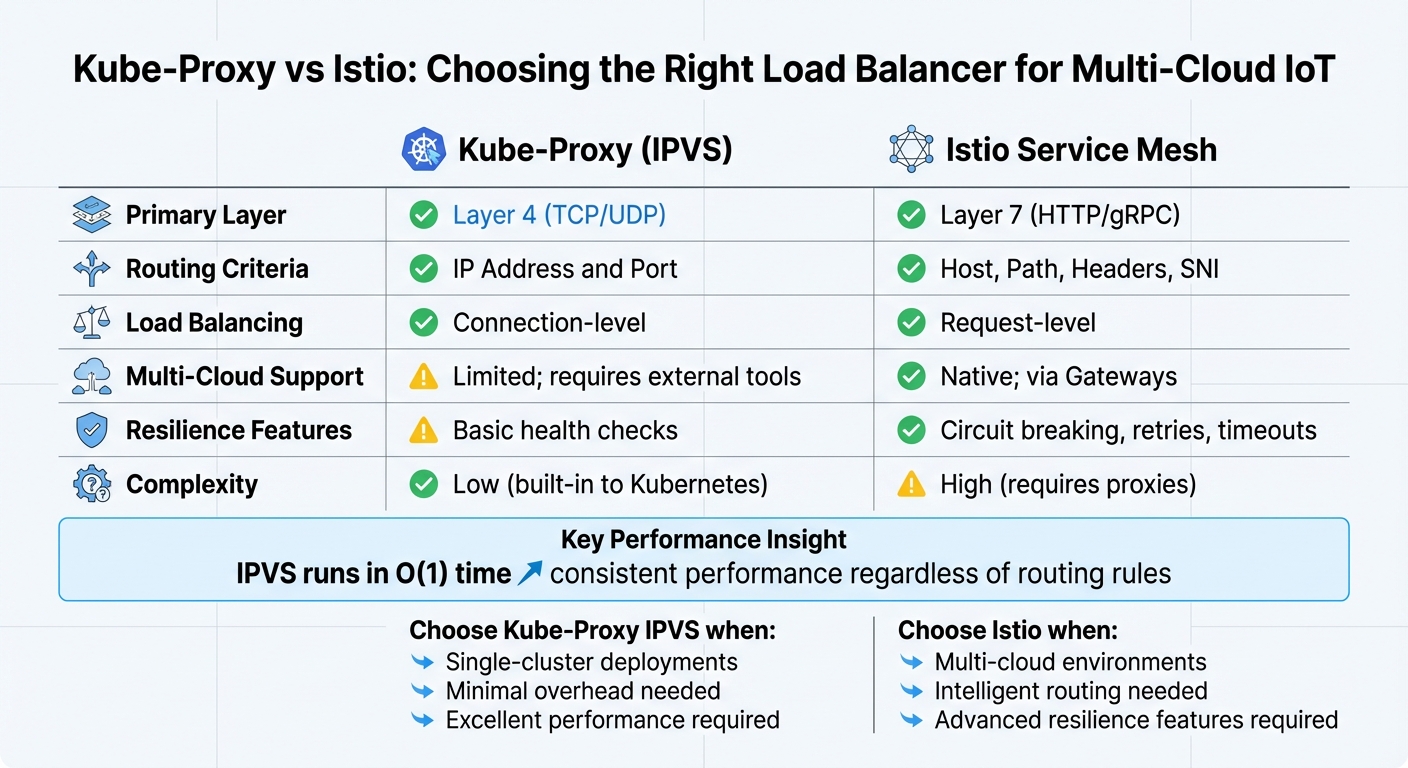

Kube-Proxy vs Istio for Multi-Cloud IoT Load Balancing Comparison

Efficient load balancing plays a critical role in ensuring IoT metrics are delivered on time, especially in multi-cloud setups. After implementing autoscaling, the next hurdle is routing these metrics seamlessly across various cloud providers. While Kubernetes' default load balancing works well within a single cluster, multi-cloud environments demand more sophisticated strategies. Let’s explore how kube-proxy and Istio handle this challenge.

Using Kube-Proxy for Load Balancing

Kube-proxy serves as Kubernetes' built-in load balancer, operating at Layer 4 to manage TCP and UDP traffic based on IP addresses and ports. For large IoT deployments managing thousands of data streams, switching kube-proxy from its default iptables mode to IPVS (IP Virtual Server) mode can significantly boost performance. Unlike iptables, which processes rules one by one, IPVS uses hash tables for near-instant operations, ensuring consistent performance even as the number of services grows.

"The advantage of IPVS over iptables is scalability: no matter how many routing rules are required... IPVS runs in O(1) time." - Kay James

To enable IPVS mode, simply configure kube-proxy with the --proxy-mode=ipvs flag and ensure the necessary kernel modules are loaded. However, kube-proxy is primarily suited for connection-level load balancing within a single cluster. For protocols like gRPC or HTTP/2, which rely on long-lived connections, this can lead to "hot" pods - where some instances handle much more traffic than others. While IPVS enhances internal routing, it lacks the advanced capabilities needed for multi-cloud setups.
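If kube-proxy is driven by a configuration file rather than command-line flags, the same switch can be sketched like this. On kubeadm-based clusters this configuration typically lives in the kube-proxy ConfigMap in the kube-system namespace; the round-robin scheduler shown is one possible choice, not a recommendation.

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  scheduler: "rr"   # round robin; other IPVS schedulers such as "lc" also exist
```

The kube-proxy pods need to be restarted after the change for the new mode to take effect.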

Using Istio for Multi-Cloud Load Balancing

Istio elevates load balancing to Layer 7, enabling routing decisions based on HTTP headers, URIs, or gRPC metadata. Because traffic is balanced per request rather than per connection, long-lived gRPC or HTTP/2 connections are far less likely to turn individual pods into bottlenecks.

By deploying Istio Gateways across providers like AWS, Azure, and GCP, you can create a unified service mesh. This allows services across clouds to function as a single infrastructure. Istio also optimizes traffic routing by prioritizing geographic proximity, reducing latency and cutting down on data transfer costs. If local resources are maxed out, Istio seamlessly redirects traffic to the nearest healthy cluster.
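Proximity-based routing with failover can be sketched with a DestinationRule such as the one below. The host name assumes the service runs in a namespace called iot, and the ejection thresholds are illustrative values. Istio needs outlier detection configured in order to decide when a locality is unhealthy enough to fail over.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: iot-processor-locality
spec:
  host: iot-processor.iot.svc.cluster.local   # assumed namespace "iot"
  trafficPolicy:
    loadBalancer:
      localityLbSetting:
        enabled: true            # prefer endpoints in the caller's locality
    outlierDetection:
      consecutive5xxErrors: 5    # assumed threshold
      interval: 30s
      baseEjectionTime: 30s
```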

Beyond routing, Istio offers resilience features like circuit breakers, retries, and timeouts, all configurable without altering application code. For example, Istio retries failed HTTP requests twice by default, with intervals starting at 25ms. Recent Istio releases disable the HTTP request timeout by default (older releases applied a 15-second default), so in high-frequency IoT environments it is worth setting an explicit timeout to keep requests from hanging on a slow backend.
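An explicit timeout and retry policy can be set per route in a VirtualService. The sketch below assumes the same iot-processor service in an iot namespace; the 2-second timeout and the retry settings are placeholder values to tune against your own latency budget.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: iot-processor-timeouts
spec:
  hosts:
    - iot-processor.iot.svc.cluster.local   # assumed namespace "iot"
  http:
    - route:
        - destination:
            host: iot-processor.iot.svc.cluster.local
      timeout: 2s                 # overall deadline for the request
      retries:
        attempts: 2
        perTryTimeout: 500ms
        retryOn: 5xx,connect-failure
```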

| Feature | Kube-Proxy (IPVS) | Istio Service Mesh |
|---|---|---|
| Primary Layer | Layer 4 (TCP/UDP) | Layer 7 (HTTP/gRPC) |
| Routing Criteria | IP Address and Port | Host, Path, Headers, SNI |
| Load Balancing | Connection-level | Request-level |
| Multi-Cloud Support | Limited; external tools | Native; via Gateways |
| Resilience | Basic health checks | Circuit breaking, retries |
| Complexity | Low (built-in to Kubernetes) | High (requires proxies) |

For single-cluster IoT deployments, IPVS mode in kube-proxy delivers excellent performance with minimal overhead. On the other hand, when handling metrics across multiple clouds with a need for intelligent routing and added resilience, Istio is the go-to solution - despite its higher complexity.

Book a demo to explore how MetricFire can simplify your multi-cloud monitoring strategy.

Integrating MetricFire for IoT Metrics Monitoring

Once you've established effective load balancing with tools like kube-proxy or Istio, the next step is centralizing your IoT metrics for real-time monitoring. MetricFire offers a hosted monitoring platform built on Graphite and Grafana, designed specifically for handling high-volume IoT data streams across multi-cloud environments. By integrating MetricFire, you can complete your IoT metrics pipeline, combining advanced load balancing with centralized monitoring for better visibility and faster issue resolution.

MetricFire simplifies the process by automatically linking Hosted Graphite (for storing metrics) with Hosted Grafana (for visualization). This eliminates the need for manual configuration, making it a great solution for teams managing thousands of IoT sensors across platforms like AWS, Azure, and GCP. With a unified dashboard, you can reduce mean time to resolution (MTTR) from hours to just minutes.

Sending Balanced Metrics to MetricFire

To start, install Prometheus to collect your metrics:

helm install prometheus prometheus-community/prometheus --set grafana.enabled=false --set alertmanager.enabled=false

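Configure Prometheus to scrape metrics from your load-balanced IoT pods, targeting pods labeled app: iot-metrics. If you run the Prometheus Operator, a ServiceMonitor does this; with the standalone chart installed above, add a scrape job to prometheus.yml instead. Then add a remote_write block to your Prometheus configuration that points to MetricFire's Graphite endpoint: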

    remote_write:
      - url: https://graphite.metricfire.com:443/api/v1/write?api_key=YOUR_API_KEY

Secure your API key by creating a Kubernetes Secret and mounting it in Prometheus:

kubectl create secret generic metricfire-api --from-literal=key=YOUR_API_KEY

This setup ensures critical metrics - like pod CPU/memory usage, request latency, and error rates - are forwarded to MetricFire, all while maintaining balance across your IoT services. For multi-cloud environments, tag metrics with identifiers such as cluster=aws-eks or cluster=gcp-gke to differentiate traffic sources.
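One way to attach such an identifier is Prometheus's external_labels setting, which adds the label to every sample sent through remote write. The fragment below is a sketch for the Prometheus instance running in the EKS cluster; the GKE instance would carry cluster: gcp-gke instead.

```yaml
# prometheus.yml fragment for the Prometheus instance in the EKS cluster
global:
  external_labels:
    cluster: aws-eks
```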

Once your metrics are flowing, you can use MetricFire's Grafana dashboards to visualize and set alerts for IoT performance.

Setting Up Grafana Dashboards and Alerts

MetricFire consolidates metrics from multiple clouds into a single interface, making it easier to manage your distributed IoT infrastructure. Within MetricFire's Grafana interface, you can import pre-built Kubernetes dashboards or create custom panels tailored to your IoT environment. Focus on these key dashboard types:

- Pod Load Distribution: Track request latency, error rates, and resource usage with queries like sum(rate(http_requests_total{job="iot-metrics"}[5m])) by (pod).

- Autoscaling Metrics: Monitor Horizontal Pod Autoscaler (HPA) targets with metrics like kubernetes_hpa_status_current_replicas{namespace="iot"}.

- Multi-Cloud Traffic: Visualize ingress traffic balanced by Istio using istio_requests_total{reporter="destination"}.

For alerts, configure rules to detect traffic imbalances before they affect performance. For example, set conditions like WHEN avg() OF query(A, 5m, now) IS ABOVE 80%, where query A measures the percentage of traffic per pod relative to the total. You can also set alerts for error rates exceeding 5% or latency surpassing 500ms, with notifications sent via email or Slack. Test these alerts by simulating IoT device surges using load testing tools.
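If you also want the 5% error-rate condition evaluated on the Prometheus side, it could be sketched as a Prometheus alerting rule like the one below. This is a different mechanism from the Grafana alert described above, and it assumes http_requests_total carries a code label holding the HTTP status; both the label and the rule names are hypothetical.

```yaml
groups:
  - name: iot-metrics-alerts
    rules:
      - alert: IoTHighErrorRate
        # Share of requests answered with a 5xx status over the last 5 minutes
        expr: |
          sum(rate(http_requests_total{job="iot-metrics", code=~"5.."}[5m]))
            /
          sum(rate(http_requests_total{job="iot-metrics"}[5m])) > 0.05
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "More than 5% of IoT metric requests are failing"
```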

| Metric Category | Key Metrics to Monitor | Purpose |
|---|---|---|
| Pod/Container | CPU/Memory utilization, Restart counts, Throttling % | Identify failing IoT processing pods |
| Node | CPU/Memory usage, Disk I/O, Network traffic (In/Out) | Monitor health of the underlying infrastructure |
| Cluster | Workload overview, Network I/O pressure, Health status | Get a high-level view of IoT metric distribution |

Be mindful of potential pitfalls, like high-cardinality IoT labels, which can cause cardinality explosion. Use Prometheus relabeling to drop unnecessary tags. Additionally, avoid setting scrape intervals above 30 seconds to maintain real-time data accuracy. MetricFire’s Basic Plan offers 24-month data retention at $99/month for 1,000 metrics, allowing you to analyze historical trends without losing critical data.
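A relabeling rule of this kind might look like the following scrape job. The label names device_id and session_id are hypothetical stand-ins for whatever per-device labels your exporters emit, and the 15-second interval is an assumed value.

```yaml
scrape_configs:
  - job_name: iot-metrics
    scrape_interval: 15s
    kubernetes_sd_configs:
      - role: pod
    metric_relabel_configs:
      # Drop per-device labels before samples are stored or forwarded.
      - action: labeldrop
        regex: device_id|session_id    # hypothetical label names
```

Dropping a label only works cleanly if the remaining labels still identify each series uniquely; otherwise it is safer to aggregate at the source.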

Ready to get started? Sign up for a free trial at Hosted Graphite or book a demo to explore how MetricFire can meet your monitoring needs.

Testing and Optimizing IoT Metrics Load Balancing

Making sure your load balancing setup can handle IoT traffic patterns effectively is key. Load testing helps you see how your Kubernetes services react to sudden spikes from thousands of devices, while optimization ensures resources aren’t wasted during downtime.

Load Testing Kubernetes Services

To simulate IoT traffic, tools like Iter8 can send concurrent requests to multiple HTTP endpoints, gathering metrics like QPS (queries per second) and latency. For example, in a tutorial using the httpbin service, Iter8 tested three endpoints (/get, /anything, and /post) simultaneously. The results were then visualized using Grafana and Prometheus for easier analysis. If you’re testing NodePort services, remember traffic flows through <NodeIP>:<NodePort>. You can also use the --nodeport-addresses flag in kube-proxy to focus test traffic on specific network interfaces.

To monitor scaling during these tests, use:

kubectl get hpa --watch

The Horizontal Pod Autoscaler (HPA) checks resource usage every 15 seconds by default, with a 5-minute stabilization window for downscaling. This prevents erratic pod count changes, often called "thrashing". For IoT workloads that experience bursty traffic, raising stabilizationWindowSeconds above its 300-second default can smooth out scaling actions further. You can also use the Prometheus Blackbox exporter to test the availability of your metric ingestion endpoints (via HTTP, HTTPS, DNS, TCP, or gRPC) under heavy loads.

In a Google Cloud tutorial from 2026, testers used the "Bank of Anthos" sample app to scale the contacts deployment to zero replicas. This verified that Prometheus liveness probes and Alertmanager correctly flagged the service as unavailable and triggered Slack alerts. You can apply this same strategy to your IoT metrics pods - manually scale deployments to zero and check that traffic redistributes properly and monitoring systems respond as expected.

These tests lay the groundwork for fine-tuning your resource allocation.

Optimizing Resource Utilization

Once you’ve gathered insights from load testing, adjust your resource settings to improve performance. For IoT traffic, incorporating metrics like packets-per-second or queue_messages_ready into scaling decisions can help handle sudden sensor bursts effectively.

Set precise resource requests for your containers, as these are critical for accurate HPA calculations. If your IoT apps experience CPU spikes during startup, check the kube-controller-manager flag --horizontal-pod-autoscaler-cpu-initialization-period, which defaults to 5 minutes, and lengthen it if initialization takes longer. This prevents the HPA from scaling based on misleadingly high CPU usage during initialization. By default, the HPA only scales if metrics deviate by more than 10% (tolerance of 0.1) from the target, avoiding unnecessary scaling for minor changes.

| HPA Parameter | Default Value | Purpose |
|---|---|---|
| --horizontal-pod-autoscaler-sync-period | 15 seconds | How often metrics are checked |
| stabilizationWindowSeconds (Scale Down) | 300 seconds | Prevents rapid pod removal during metric fluctuations |
| tolerance | 0.1 (10%) | Ignores minor metric changes to avoid frequent scaling |
| initial-readiness-delay | 30 seconds | Wait time before considering pod metrics for scaling |

For large IoT setups with over 1,000 backend endpoints, consider switching from the legacy Endpoints API to the EndpointSlice API. This API organizes endpoints into slices of 100 by default, improving load balancing efficiency. Additionally, set up readinessProbes and startupProbes to ensure traffic only routes to pods ready to process metrics. When scaling, focus on container-specific resource metrics, ignoring heavy sidecars like logging agents or service mesh proxies that could skew results.
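The probe setup could be sketched as the container fragment below. The /healthz and /ready paths and the timing values are assumptions; use whatever health endpoints your processor actually exposes.

```yaml
# Fragment of a pod template spec
containers:
  - name: iot-processor
    ports:
      - containerPort: 9376
    startupProbe:                 # gives slow starters up to 60s (30 x 2s)
      httpGet:
        path: /healthz            # hypothetical endpoint
        port: 9376
      failureThreshold: 30
      periodSeconds: 2
    readinessProbe:               # gates Service traffic once started
      httpGet:
        path: /ready              # hypothetical endpoint
        port: 9376
      periodSeconds: 5
```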

If you need tailored advice on fine-tuning your IoT metrics load balancing, consider reaching out to a MetricFire expert for a demo.

Conclusion

Kubernetes offers powerful tools to scale IoT metrics effectively across multi-cloud setups. One standout feature, Topology Aware Routing, reduces latency and cross-zone data costs by preferring endpoints in the zone where the traffic originated. This aligns with findings from academic research:

"As computation shifts from the cloud to the edge to reduce processing latency and network traffic, the resulting Computing Continuum (CC) creates a dynamic environment where meeting strict Quality of Service (QoS) requirements... becomes challenging." - Ivan Cilic et al., IEEE Graduate Student Member

Pairing Kubernetes with MetricFire simplifies monitoring by removing the need to manage your own infrastructure. With its managed Graphite and Grafana platform, MetricFire provides long-term data storage for trend analysis, multi-channel alerting (via Email, SMS, or Slack), and centralized monitoring across multiple Kubernetes clusters. The ability to track custom metrics allows for smarter autoscaling decisions tailored to your application's specific needs, rather than relying solely on CPU or memory usage. This centralized approach strengthens system resilience, enabling quicker responses to performance issues.

By combining Kubernetes' orchestration capabilities with MetricFire's monitoring insights, you can build a robust, high-performing IoT metrics stack. Prioritize monitoring the four golden signals - Latency, Traffic, Errors, and Saturation - to maintain visibility at every level, from clusters to individual applications. With 84% of organizations now running containers in production, adopting these strategies ensures your IoT metrics infrastructure remains reliable and cost-efficient.

Ready to get started? Sign up for a free trial at Hosted Graphite or book a demo to discuss your monitoring needs with the MetricFire team.

FAQs

When should I use Istio instead of kube-proxy for IoT metrics?

For basic load balancing and traffic forwarding in Kubernetes, kube-proxy is a solid choice. It keeps traffic flowing efficiently by leveraging tools like iptables or IPVS. This option works best for straightforward IoT setups where simplicity is key.

On the other hand, if your IoT deployment demands advanced features, consider Istio. With its service mesh capabilities, Istio offers enhanced traffic management, robust security, and detailed metrics collection. Features like routing rules and telemetry make it a great fit for managing complex IoT environments that require precise control and deeper visibility.

What custom metric should I use for HPA in an IoT pipeline?

When setting up a Horizontal Pod Autoscaler (HPA) for an IoT pipeline, it's crucial to rely on custom metrics that reflect your application's specific performance needs. Metrics like telemetry data rates, request latency, or queue depth can provide a more accurate picture of your system's behavior.

Here's how to make it work:

- Expose Metrics: Use a monitoring library to surface these custom metrics from your application.

- Collect Metrics: Tools like Prometheus are great for gathering and storing these metrics.

- Integrate with HPA: Use a metrics adapter to convert the collected data into a format that HPA can understand.

This setup allows your system to adjust dynamically, scaling resources in real-time based on IoT data. The result? Efficient resource allocation and smoother performance under varying workloads.

How do I prevent metrics label cardinality from exploding?

To keep metrics label cardinality under control, it's important to take a few key steps:

- Use proper instrumentation at the source: Ensure that the metrics you collect are well-structured and avoid unnecessary labels or dynamic values that can inflate cardinality.

- Normalize dynamic data: Use sanitization processors to standardize or limit unpredictable data, such as user IDs or session tokens, which can otherwise create an overwhelming number of unique labels.

- Enforce system-level cardinality limits: Set hard limits within your system to prevent runaway cardinality from impacting performance.

By following these steps, you can maintain a stable and efficient metrics pipeline, reducing the risk of performance bottlenecks or resource strain.
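For the system-level limits, Prometheus itself offers per-job guards that can act as a backstop. The values below are assumed budgets for illustration only; a scrape that exceeds them is treated as failed, so set them with headroom.

```yaml
scrape_configs:
  - job_name: iot-metrics
    sample_limit: 50000             # max samples accepted per scrape
    label_limit: 30                 # max number of labels per sample
    label_value_length_limit: 200   # max length of any label value
```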