Table of Contents

- Introduction

- Step 1: What Is NVIDIA DCGM? (and why it beats spot checks)

- Step 2: NVIDIA DCGM Monitoring: Quick Start

- Step 3: Scrape with Telegraf (or Prometheus)

- Step 4: Key DCGM Metrics to Track (cheat sheet)

- Step 5: Alerts That Catch Real Issues

- Step 6: Troubleshooting DCGM Exporter

- GPU Stress Testing with gpu-burn

- Why GPU Servers Consume So Much Power

- FAQs

- Conclusion

Great systems are not just built. They are monitored.

MetricFire is a managed observability platform that helps teams monitor production systems with clean dashboards and actionable alerts. Delivering signal, not noise. Without the operational burden of self-hosting.

Want a quick path to NVIDIA DCGM monitoring? This guide shows how to install the DCGM Exporter, scrape /metrics, visualize in Grafana, and set alerts for power, errors, and utilization.

Introduction

GPUs aren’t just for graphics anymore; they’ve become the workhorses of modern computing whenever massive process parallelism is needed. Unlike CPUs, which handle a few threads really well, GPUs are built to blast through thousands of operations at once, making them perfect for training AI models, running scientific simulations, or processing huge datasets. The trade-off is that all this horsepower comes with higher power draw, more heat, and memory systems that can bottleneck if you’re not paying attention.

That’s why GPU monitoring matters: without it, you can end up wasting resources by hitting thermal throttling or even pushing your hardware into failure.

In this guide, we’ll walk through how to set up NVIDIA’s DCGM Exporter to collect GPU metrics, and use Telegraf to scrape and forward them to MetricFire for storage and visualization.

Use MetricFire's Hosted Graphite platform to analyze your system's performance and troubleshoot errors. Book a demo with our team for more detailed information about MetricFire and how to integrate it with your system; sign up for a MetricFire free trial to get started with seeing your GPU’s vital signs.

Step 1: What Is NVIDIA DCGM? (and why it beats spot checks)

Most GPU servers ship with NVIDIA's System Management Interface (SMI), a built-in command-line tool for checking performance and health stats. Popular GPUs that include this include the Titan Series (Titan RTX, Titan V, Titan Xp) and the RTX 30XX Series (RTX 3060, RTX 3070, RTX 3080, RTX 3090).

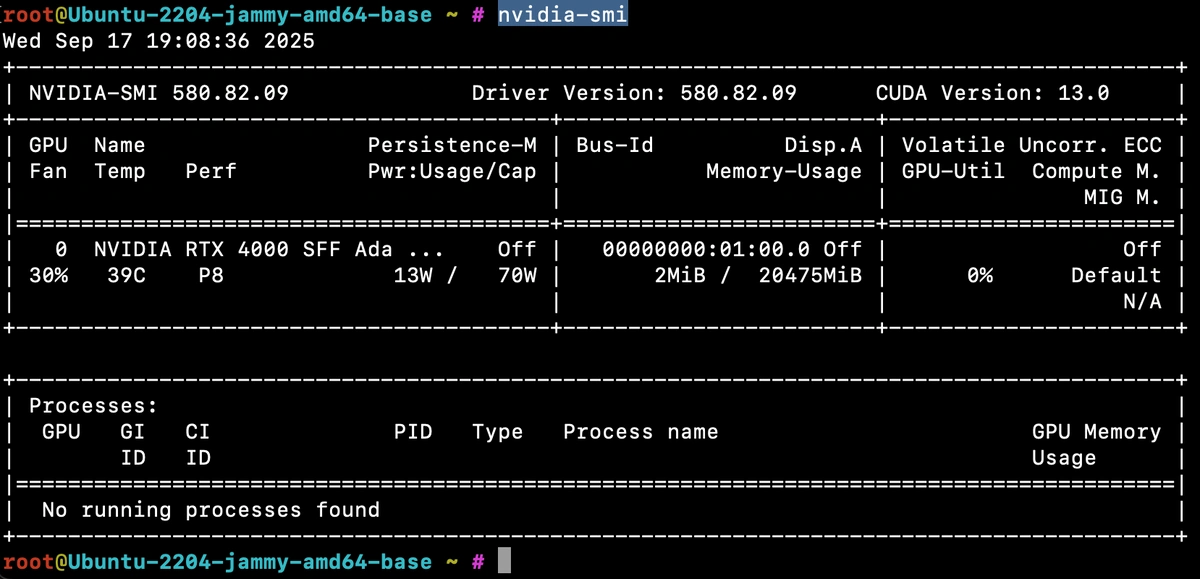

Running nvidia-smi shows you real-time usage details like GPU utilization, memory consumption, temperature, and power draw—it’s the quickest way to confirm your GPU is alive and working.

For testing, we spun up a Hetzner GEX44 GPU server (running Ubuntu 22.04) and used it to explore how to capture and visualize GPU metrics for long-term performance tracking.

While SMI is great for spot checks, it doesn’t provide a continuous stream of metrics for dashboards and alerts. That’s where NVIDIA DCGM (Data Center GPU Manager) comes in—it exposes the same low-level telemetry over an HTTP endpoint that can be scraped by Telegraf, OpenTelemetry, or Prometheus, and stored in MetricFire’s Hosted Graphite backend for long-term monitoring.

Step 2: NVIDIA DCGM Monitoring: Quick Start

Install DCGM + dcgm-exporter

NVIDIA’s Data Center GPU Manager (DCGM) is a low-level toolkit for monitoring and managing GPUs in server environments. It exposes detailed telemetry—utilization, memory, temperature, power, reliability counters—that you wouldn’t get from a simple spot-check tool like nvidia-smi.

On its own, DCGM provides the runtime and APIs to access GPU stats. When paired with the DCGM Exporter, those metrics are made available over HTTP in Prometheus format so they can be scraped by collectors like Telegraf, OpenTelemetry, or Prometheus itself.

Setup commands (Ubuntu 22.04):

Add the NVIDIA CUDA/DCGM repo which installs NVIDIA's keyring and adds their official repository, so apt can fetch packages on Ubuntu 22:

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/cuda-keyring_1.1-1_all.deb

sudo dpkg -i cuda-keyring_1.1-1_all.deb

sudo apt-get update

Install DCGM runtime to provide the low-level host engine and libraries that expose GPU stats. It's required by dcgm-exporter to talk to the NVIDIA driver and collect metrics:

sudo apt-get install -y datacenter-gpu-manager

Install and start DCGM-Exporter (via Snap). The exporter runs a small HTTP server on port 9400, exposing GPU stats in the Prometheus format so collectors (like Telegraf) can scrape them:

sudo snap install dcgm

sudo snap start dcgm.dcgm-exporter

Verify your metrics endpoint:

Verify the metrics endpoint to confirm that the exporter is running by querying the metrics endpoint. You will see GPU temperature, power usage, clock speeds, and more:

curl localhost:9400/metrics | head -20

Step 3: Scrape with Telegraf (or Prometheus)

If you don't already have an instance of Telegraf running in your server, install our handy HG-CLI tool to quickly install/configure Telegraf:

curl -s "https://www.hostedgraphite.com/scripts/hg-cli/installer/" | sudo sh

NOTE: You will need to input your Hosted Graphite API key, and follow the prompts to select which metric sets you want. The CLI tool automatically configures the output to your Hosted Graphite account!

Once it's installed, open the Telegraf configuration file at: /etc/telegraf/telegraf.conf and add the following section so Telegraf scrape the metrics endpoint:

[[inputs.prometheus]]

urls = ["http://localhost:9400/metrics"]

Visualize in Grafana (MetricFire dashboards)

Simply save your updated conf file and restart the Telegraf service to forward the GPU performance metrics to your HG account. Or run it manually to inspect the output for potential syntax/permission errors:

telegraf --config /etc/telegraf/telegraf.conf

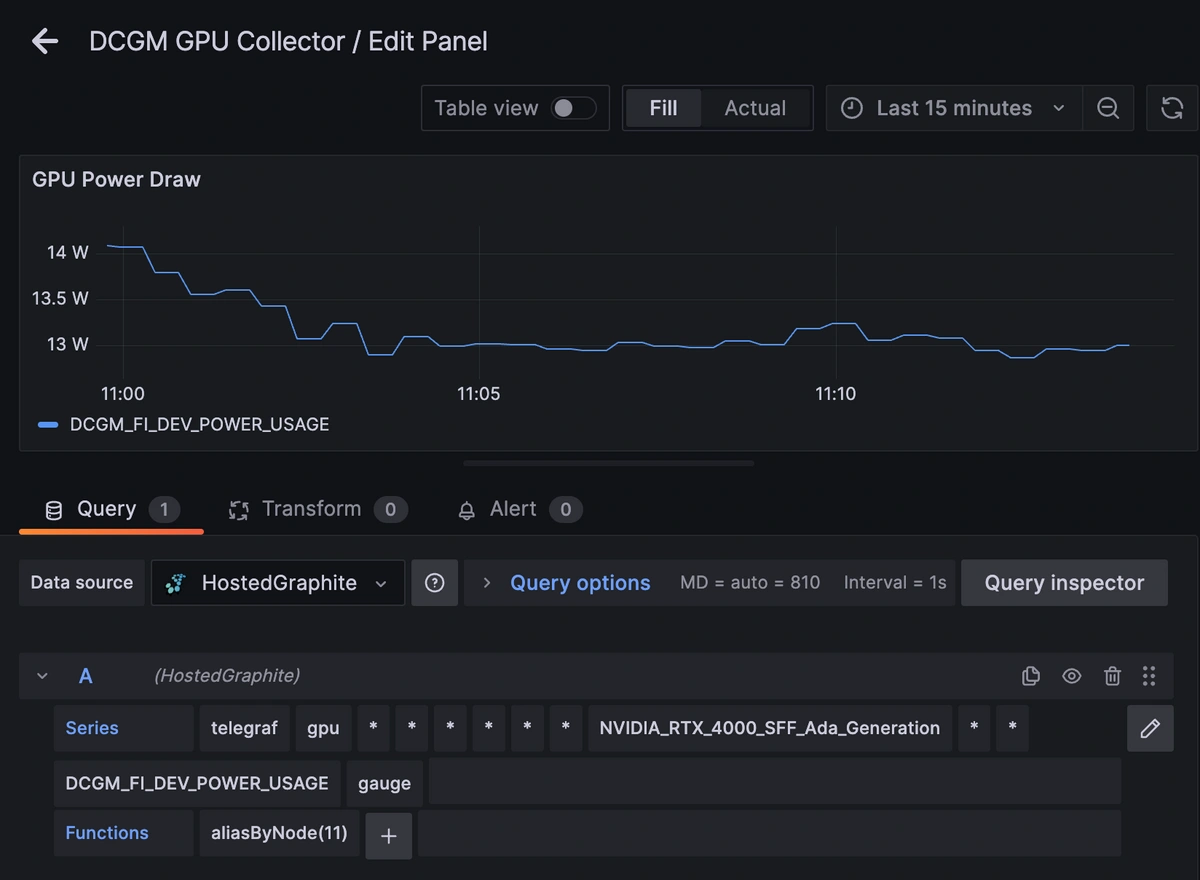

Once these metrics hit your Hosted Graphite account, you can use them to create custom dashboards and alerts!

Once your metrics are in Hosted Graphite, log in to Grafana and start building GPU performance dashboards.

Explore MetricFire Grafana dashboards

Learn more about Telegraf integration

Step 4: Key DCGM Metrics to Track (cheat sheet)

Once the DCGM exporter is up and running, you’ll see a wide range of GPU metrics automatically collected and forwarded to the Hosted Graphite backend. These cover utilization, memory, thermal, power, bandwidth, and even hardware health indicators. Below is an overview of the most useful default metrics that will be collected, along with what each represents and its unit of measurement.

| Metric | Description | Unit |

|---|---|---|

| GPU Utilization (DCGM_FI_DEV_GPU_UTIL) | Core GPU compute engine load | % |

| Power Usage (DCGM_FI_DEV_POWER_USAGE) | Current GPU power draw | W |

| GPU Temperature (DCGM_FI_DEV_GPU_TEMP) | GPU core temperature | °C |

| Memory Temperature (DCGM_FI_DEV_MEMORY_TEMP) | VRAM module temperature | °C |

| Framebuffer Used (DCGM_FI_DEV_FB_USED) | VRAM currently in use | MiB |

| Xid Errors (DCGM_FI_DEV_XID_ERRORS) | Fatal driver-reported error count | count |

| ECC Remapped Rows (DCGM_FI_DEV_CORRECTABLE/UNCORRECTABLE_REMAPPED_ROWS) | Memory health indicators | count |

Reliability Metrics (Remaps, Retries, Errors)

Metrics like remapped rows, PCIe replay counters, and Xid errors are designed as health indicators rather than performance metrics. On a healthy GPU, these values should stay at 0. A nonzero value means the hardware had to correct or retry something it shouldn't have to (like memory rows were remapped due to ECC faults, or PCIe packets were retried due to transmission errors). If you see these counters incrementing, it's a sign of underlying instability like failing VRAM, an unreliable PCIe bus, or a deeper hardware/driver fault. In production, these are "red flag" metrics so any nonzero value warrants investigation.

Step 5: Alerts That Catch Real Issues

Set alerts to detect early signs of degradation:

-

GPU Utilization <10% for >5 min → underuse or stalled jobs

-

Xid Errors >0 → potential driver/hardware instability

-

Power Usage >90% of TDP → possible thermal throttling

-

ECC Remaps increasing → memory degradation

Step 6: Troubleshooting DCGM Exporter

-

Empty /metrics? Ensure

dcgm-exporteris running, and the DCGM runtime matches your driver version. -

Missing ECC fields? Consumer GPUs don’t expose them—expected behavior.

-

Container issues? Use the NVIDIA Container Toolkit when running DCGM in Docker/Kubernetes.

GPU Stress Testing with gpu-burn

gpu-burn is a CUDA-based stress test that pushes the GPU to 100% utilization. It’s commonly used for burn-in testing and validating stability under heavy load.

First, install build tools and CUDA toolkit that is required for compilation:

sudo apt-get update

sudo apt-get install -y git make gcc nvidia-cuda-toolkit

Then, clone the gpu-burn repository and compile the binary:

git clone https://github.com/wilicc/gpu-burn.git

cd gpu-burn

make

Finally, run gpu-burn for 2 min (adjust duration as needed):

./gpu_burn 120

While running, you’ll see spikes in GPU utilization, power draw, and temperature in your DCGM metrics.

Why GPU Servers Consume So Much Power

GPUs are among the most power-hungry components in modern compute infrastructure. Unlike CPUs, which idle down aggressively, GPUs maintain a higher baseline draw just to keep cores and memory ready. Under load, they can spike toward their thermal design power (TDP) hundreds of watts to sustain thousands of parallel compute cores and high-bandwidth VRAM simultaneously. Even at idle, memory refresh cycles and driver management consume measurable energy. Over time, that translates into large cumulative energy use, which is exactly what your DCGM energy counter is reporting.

FAQs

Q1. What’s the fastest way to start NVIDIA DCGM monitoring?

Install DCGM (datacenter-gpu-manager) and dcgm-exporter, verify http://localhost:9400/metrics, then scrape with Telegraf or Prometheus and graph in Grafana.

Q2. Which DCGM metrics should I alert on first?

Start with GPU Utilization, GPU/Memory Temperature, Power Usage, Xid errors, and ECC remap counters; these surface throttling, instability, or failing VRAM early.

Q3. How is DCGM better than nvidia-smi for monitoring?smi is great for spot checks; DCGM exposes a streaming set of fields (utilization, mem, power, reliability) designed for dashboards and alerting.

Q4. Can I run dcgm-exporter on Kubernetes?

Yes—run it as a DaemonSet so each GPU node exposes metrics that your scraper (Prometheus/Telegraf) can collect cluster-wide.

Q5. Why do some ECC metrics not appear on my GPU?

Some consumer GPUs don’t support ECC features; those DCGM fields will be absent by design.

Conclusion

Modern GPU servers are the backbone of AI and HPC. Their efficiency and reliability depend on constant visibility into GPU utilization, temperature, and power. With NVIDIA DCGM Monitoring integrated into MetricFire’s Hosted Graphite via Telegraf, you gain the observability needed to prevent failures, tune performance, and control energy costs.

Modern GPU servers are the backbone of AI and high-performance computing, enabling workloads that would overwhelm even the fastest CPUs. But their strengths also make them complex pieces of hardware, where utilization, memory health, temperature, and power consumption all directly impact reliability and cost. Monitoring them isn't just about chasing performance charts, it's about ensuring workloads run efficiently, keeping infrastructure stable, and avoiding expensive downtime. With tools like the DCGM Exporter and Telegraf integrated into your observability stack, you can treat GPU servers as transparent and measurable systems. In short, if you depend on GPU acceleration, monitoring is not optional. It is the safeguard that ensures those powerful processors keep delivering when you need them most.

Reach out to MetricFire today and learn how their Hosted Graphite product can satisfy your monitoring requirements and give you full visibility into any environment!

Book a demo with MetricFire experts or sign up for the free trial today to learn more about our features.